Since the middle of the 20th century, the public image of nurses has undergone significant transformation. Shifts in social and gender norms, media portrayal of stereotypes, and evolving healthcare roles for nurses have all contributed to the public perception of nursing. With technological advancements and the explosion of generative artificial intelligence (GAI), images of nurses can now be created in seconds with text-to-image generators; however, these images may contain biases and stereotypes which can lead to continued misperceptions which potentially harm the nursing profession. The purpose of this research study was to empirically and systematically examine GAI images of nurses (n = 288) from three AI image generators using quantitative content analysis. Statistically significant differences were found between the three image generators regarding gender, ethnic diversity, and the sexualization of nurses in images. The study findings highlight the need for critical evaluation of AI-generated imagery to address biases and stereotypes, with implications for leveraging AI technologies responsibly to promote an accurate and diverse representation of the contemporary nursing profession.

Key Words: image, nurse, nursing, generative AI, artificial intelligence, bias, gender, stereotypes

Nursing, consistently recognized as the most trusted healthcare profession, has undergone significant changes in its roles and responsibilities over the past century. Through evidence-based practice and advanced education, nurses have become more autonomous in their roles, working as mid-level providers, often alongside primary care physicians. However, the public image of nursing remains tied to outdated and negative stereotypes that undervalue the knowledge and expertise that contemporary nurses bring to healthcare (López-Verdugo et al., 2021). Although nurses have evolved from a marginalized, supportive role to a skilled and autonomous professional role, persistent stereotypes perpetuated by media continue to harm the profession (Gonzalez et al., 2023; Summers & Summers, 2014; Teresa-Morales et al., 2022). From the “angel in white” in the 1920s to the “naughty nurse” trope of the mid-20th century to today’s persistent depiction of nurses as mere unskilled assistants to doctors, these misconceptions continue to undermine the advancements and contributions of this profession (Zhou et al., 2024).

While research has established links between negative stereotypes and challenges like the persistent nursing shortage (Godsey et al., 2020) and patient harm (Edwards-Maddox et al., 2022), little research has explored how generative AI (GAI) images can shape the public perception of nursing. With the rapid advancement of AI technologies across multiple media fields, these tools hold significant potential to shape public perceptions in the future. This gap presents a unique opportunity to examine how GAI technologies contribute to or challenge these long-standing perceptions, which reflect cultural narratives. This study aimed to empirically and systematically analyze AI-generated images depicting nurses across the past century. We focused on factors that shape the public image of nursing, including biases, sexualization, professionalism, context, and realism.

Background

Multiple academic databases, including PubMed, the Cumulative Index of Nursing and Allied Health Literature (CINAHL), Medline, and Google Scholar, were used to conduct a literature review using key search terms such as “public image of nursing,” “stereotypes of nurses,” and “public perception of nurses,” ensuring identification of relevant peer-reviewed articles on this topic. The origins of modern nursing can be traced back to the traditions of religious service and military care, both of which laid the foundation for the professional values of compassionate, disciplined service to others (van der Cingel & Brouwer, 2021). In the nineteenth century, nursing shifted away from its religious affiliations and was frequently perceived in Europe as undesirable labor performed by women of questionable morality (Summers & Summers, 2014). Later in that century, in Florence Nightingale's era, nursing was re-established as a distinctly scientific and respectable profession, marked by the publication of the first nursing journal, Nursing Notes, in 1887. However, in modern times, nursing remains an underappreciated field, with its recognition as a skilled and scientific profession often diminished by the media (López-Verdugo et al., 2021).

Portrayals of Nurses

Cliches and stereotypes of nurses persist today, including the nurse as the doctor's handmaiden, the ministering angel, and the battle-ax (Gonzalez et al., 2023; Zhou et al., 2024). These all negate nurses’ roles and portray them as lacking critical thinking or being cold, rigid, and authoritative. Popular culture, including literature and films, often portrays nurses in authoritarian roles, frequently casting them as dramatic or villainous characters. Stereotypes are repeatedly carried out in the media, portraying fictional nurse characters as the “heroine, harlot, harridan, or handmaiden” (Stokes‐Parish et al., 2020, p. 463). In the award-winning sitcom M*A*S*H from the 1970s-80s, one could find nurse Margaret “Hot Lips” Houlihan as the only female lead character, and one who frequently had flings with physicians (Hopkins, 2021). Contemporary television dramas like ER and Grey’s Anatomy further reinforced these negative stereotypes, often showing physicians performing tasks typically handled by nurses and portraying nurses as peripheral figures rather than central contributors to patient care (Summers & Summers, 2014). Perhaps the most famous and persistent negative portrayal of a nurse as the battle-ax stereotype came from the popular movie One Flew Over the Cuckoo’s Nest, depicting Nurse Ratched as scandalous and uncaring.

These portrayals are not only inaccurate but damaging to both the public image of nursing and nurses' view of themselves (Yavaş & Özerl, 2023). Moreover, they harm the nursing profession by discouraging recruitment into the field (Blau et al., 2023) and by negatively influencing policy decisions that affect the allocation of nursing resources (Gonzalez et al., 2023). Furthermore, research has shown that branding nurses in this way has resulted in a slew of negative outcomes for the nursing profession, including poor job performance, violence against nurses, inadequate recruitment, and decreased job satisfaction (Gonzalez et al., 2023; van der Cingel & Brouwer, 2021).

Another disturbing portrayal of nurses is that of the naughty nurse (Mohammed et al., 2021). The sexualization of nurses continues to be pervasive in media and undermines the professionalism and influence of nurses. The “naughty nurse” stereotype with sexualization in images promotes the notion that nurses are sex objects for romantic pursuit and not independent, autonomous, and skilled professionals. In the 1950s and 1960s, television shows helped solidify the "sexy nurse" stereotype, accompanied by sexualized postcards featuring pin-up images of nurses (Thompson, 2014). By the 1980s, nurses began actively challenging these representations; however, the sexy nurse stereotypes remain deeply embedded in popular culture, as evidenced by the continued presence of sexy nurse costumes annually in Halloween stores. A search for “nurse” on Twitter or Instagram today will yield a wide variety of naughty nurse images, showcasing how this negative stereotype persists in modern society. In their book Saving Lives: Why the Media's Portrayal of Nurses Puts Us All at Risk, Summers and Summers (2014) highlighted how the "naughty nurse" stereotype damages the nursing profession by eroding nurses' professionalism, diminishing their authority, and reducing public trust in their expertise. Unfortunately, sexualized stereotypes of nurses can also contribute to workplace harassment and undermine nurses’ ability to advocate for patient care and public health policy, ultimately affecting healthcare outcomes.

Negative Concerns

Misperceptions of nurses in the media such as these not only harm the public image of the profession but may also contribute to a lack of diversity within nursing. For instance, although recent efforts and campaigns have aimed to broaden the appeal of nursing to diverse genders, the profession remains predominantly viewed as a female-only profession (Quinn et al., 2022). Currently, men make up 10% of the registered nurse (RN) workforce (Smiley et al., 2021). Viewing the profession as belonging to only one gender reinforces outdated stereotypes and creates barriers to attracting men to the field. This limits diversity in nursing, as men who choose nursing often face stigma and challenges to their masculinity due to these gender biases. Moreover, this stigma may be a contributing factor towards men choosing specialties portrayed by the media as exciting, high-intensity, and adrenaline-filled, such as emergency medicine and intensive care (Paskaleva et al., 2020; Stokes-Parish et al., 2020).

In addition to gender stereotypes, racial underrepresentation in nursing remains a significant issue with calls in the literature for diversifying the nursing workforce (Carroll & Harris, 2024; Smiley et al., 2024). Although racial diversity among RNs has increased in the past decade, the gap between the RN workforce and the broader U.S. population has widened with 81% of the RN workforce being white, while whites represent only 72% of the U.S. population (Smiley et al., 2021). A lack of racial diversity in images of nurses may discourage aspiring nurses from varied backgrounds who do not see themselves represented within the images of this workforce. These challenges coincide with a worldwide nursing shortage affected by persistent stereotypes and biases of nurses in the media, demanding workplace conditions, and limited resources.

GAI Images and Nurses

The advent of GAI may serve to reinforce these stereotypes. GAI programs leverage advanced deep learning techniques, such as neural networks, to create realistic and original images from extensive databases of paired text and internet images, generating visuals based on text prompts (Samala et al., 2024). Explained simply, GAI uses advanced computer systems to learn from large collections of text and images found online, allowing it to create realistic pictures based on written descriptions. Therefore, it is no surprise, given the negative portrayal of nurses in the media, that GAI platforms have been shown to contain implicit biases about gender, race, and the hyper-sexualization of females (Caliskan, 2021; Currie et al., 2024a). The continued negative media portrayal of nurses throughout many decades can feed AI with inaccurate and offensive portrayals of nurses which reinforce cultural stereotypes (Fernández de Caleya Vázquez & Garrido-Merchán, 2024). These implicit biases may support microaggressions toward male nurses, as they are assumed to be the doctor, while the females are assumed to be the nurse (Currie et al., 2024b). Furthermore, text-to-image AI generators have been found to perpetuate existing societal gender biases not only for nurses but for professionals in other healthcare fields such as physicians and paramedics (Currie et al., 2024a).

To challenge and dismantle the inaccurate, biased stereotypes about nursing, it is crucial to create an accurate and fair representation of modern nurses and their work environments, shaping society's perception of the profession. By doing so, we can improve the public portrayal of nurses which has the power to impact future generations through recruitment into the professional workforce. Although several recent articles were found exploring the topic of AI-generated images within various professions (e.g., paramedics or physicians; Currie et al., 2024a, 2024b), very few articles were found specifically looking at GAI images of nurses.

Reed et al. (2023) described the use of GAI with prelicensure student nurses as a teaching strategy, highlighting stereotypes and biases in the generated images, and later explored student fears of entering the nursing profession through GAI images, noting their frequent inaccuracies and exaggerations (Reed, 2024). Only one article in our search focused on how GAI images pictured professional licensed nurses, specifically examining nurses working with older adults (Byrne et al., 2024). In the article, Byrne et al. (2024) found that nurses were generally shown as happy, passive, and calm caregivers, a view out of touch with the complexity and fast-paced nature of nurses' multiple roles.

Additionally, very little medical equipment was portrayed other than a stethoscope, thus oversimplifying the role and skills of nurses who use a variety of equipment to support patients. Moreover, Byrne et al. (2024) found that most nurses in their GAI images had dark skin tones, indicating potential implicit racial biases towards the “lowly” job of working with older adults. While Byrne et al. (2024) focused on the representation of modern nurses working in geriatrics, no research articles could be found that examined the overall public image of nurses as demonstrated by GAI images over time.

Research Gap

This study aimed to empirically and systematically analyze AI-generated images of nurses over the past century, focusing on factors that shape the public image of nursing (e.g., biases, sexualization, professionalism, context, realism). The following research questions guided the study:

- What are the frequencies of specific recurrent visual elements in AI-generated images of nurses (e.g., attire, symbols, settings) that affect the public image of nurses?

- How do gender, racial representations, and stereotypical portrayals of nurses in AI-generated images differ across image generator platforms?

Methods

Study Design

Exemption from the institutional review board was granted for non-human research. This study utilized quantitative content analysis with a deductive research design and a systematic observational approach to examine a dataset of GAI images of nurses. Quantitative content analysis involves quantifying visual elements in images according to predefined categories or coding schemes based on the literature review and expert opinion. This information is then systematically applied to the images using a structured coding framework. In contrast to qualitative content analysis, which is inductive and focuses on interpreting underlying meanings or themes, quantitative content analysis is deductive: it applies a predefined coding scheme that allows content to be transformed into numerical data for statistical evaluation. This methodology is commonly used in journalism, media, and communication studies (Riffe et al., 2014).

To empirically examine the public image of nursing through AI-generated images, we generated a dataset of images of nurses using standardized prompts. The dataset (n = 288) contained images of nurses generated across three popular AI text-to-image platforms that were accessible to the research team: Midjourney, Adobe Firefly, and ImageFx by Google. All images were created in October 2024. For each platform, eight images were created for each of 12 standardized prompts: “nurse from 1920s,” continuing with similar individual prompts by decade up to “nurse from 2010s,” followed by “(standard*) nurse today” and “nurse of the future.” The word “standard” was added in Adobe Firefly only, for the “nurse today” prompt, as that platform required an additional term in the prompt.

Image seeds were not used, so that each iteration generated a unique, random image. An image seed is a setting, starting point, or blueprint for the AI to create an image. A total of 288 images were created and analyzed across the three platforms: 8 images for each of the 12 prompts on each platform (96 images per platform, or 24 images per prompt across the three platforms).
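The sampling design described above can be enumerated to check the arithmetic. The following is a minimal sketch, not the authors' procedure: the variable names are hypothetical, the prompt strings for the intermediate decades are inferred from the "by decade" description, and the Firefly-specific "standard" wording is left out.

```python
from itertools import product

# Platform names as given in the text; everything else here is illustrative.
PLATFORMS = ["Midjourney", "Adobe Firefly", "ImageFx"]

# Ten decade prompts (1920s through 2010s) plus two non-decade prompts.
# The exact wording of the intermediate decade prompts is an assumption.
DECADE_PROMPTS = [f"nurse from {year}s" for year in range(1920, 2020, 10)]
PROMPTS = DECADE_PROMPTS + ["nurse today", "nurse of the future"]

IMAGES_PER_PROMPT = 8  # images generated per prompt on each platform

# One record per generated image: (platform, prompt, image index).
design = list(product(PLATFORMS, PROMPTS, range(1, IMAGES_PER_PROMPT + 1)))

per_platform = len(PROMPTS) * IMAGES_PER_PROMPT    # images per platform
per_prompt = len(PLATFORMS) * IMAGES_PER_PROMPT    # images per prompt, all platforms

print(len(PROMPTS), per_platform, per_prompt, len(design))
```

Enumerating the design this way makes the cell sizes explicit: 12 prompts, 96 images per platform, 24 images per prompt across platforms, and 288 images overall.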

Study Sampling and Analysis

Quantitative content analysis was used to empirically examine the large dataset of images; this process allows for systematic, objective, and replicable coding of visual data into measurable variables, facilitating statistical analysis and pattern identification. It is “a summarizing quantitative analysis of messages that relies on the scientific method” (Neuendorf, 2002, p.10). Cross-sectional quantitative content analysis frequently uses purposive sampling which involves taking a series of media produced during a certain timeframe (Riffe et al., 2014). Purposive sampling in this study allowed us to focus on a specific dataset directly related to the study goals. We followed the process for quantitative content analysis as described by Riffe et al. (2014). This process included five steps: 1) developing research questions to identify variables, 2) examining existing literature to provide theoretical definitions of variables to be examined, 3) determining the appropriate level of measurement for each variable, 4) creating coding instructions including classification system and enumeration rules, and 5) creating a numerical coding system for recording variables in the computer.

We created a quantitative content analysis coding scheme for human coding using a written codebook to allow for careful application of the predetermined codes (Table 1). The coding scheme was developed following the process by Riffe et al. (2014) using the literature review and expert opinions to determine variables to examine based on the research questions. The protocol and coding schema were pilot tested for reliability with three researchers prior to the full study coding. Interrater reliability was assessed using percent agreement with 10 randomly chosen images from the sample of 288. For this study, the researchers assessed the 12 coding variables (Table 1) and the percent agreement for the three raters was 90.8% for the 10 images. Three coding variables provided scores less than 90%, including sexualization criteria, realistic stethoscope, and setting/background. We discussed inconsistencies and resolved discrepancies by refining the explanation of the coding variables (#1, #9, #12). Together we determined that for categories with multiple criteria (e.g., sexualization criteria), the presence of any single criterion was sufficient to classify the item as 'Yes.' Three coders then worked independently to code all 288 images supervised by the primary investigator. Coding units assigned were randomly selected to avoid systematic error.
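Percent agreement can be computed in more than one way with three raters, and the text does not say which variant was used. The sketch below assumes the strictest reading, in which a coding decision counts as agreed only when all three raters assign the same code. The function name and the toy ratings are hypothetical; the tally of 109 agreed decisions out of 120 (10 images by 12 variables) is simply one split that is arithmetically consistent with the reported 90.8%, not data from the study.

```python
def percent_agreement(decisions):
    """Share of coding decisions on which every rater gave the same code.

    `decisions` is a list of tuples, one tuple per (image, variable) pair,
    holding one code per rater.
    """
    if not decisions:
        raise ValueError("at least one coding decision is required")
    agreed = sum(1 for codes in decisions if len(set(codes)) == 1)
    return 100.0 * agreed / len(decisions)


# Hypothetical pilot data: 10 images x 12 variables = 120 decisions,
# 109 with full agreement and 11 with at least one dissenting rater.
pilot = [(1, 1, 1)] * 109 + [(1, 1, 2)] * 11

print(round(percent_agreement(pilot), 1))
```

Percent agreement does not correct for agreement expected by chance, so a chance-corrected coefficient would usually give a lower figure on the same ratings.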

The Statistical Package for the Social Sciences (SPSS, Version 28) was used for data analysis. Descriptive statistics, including frequencies and percentages, were used to assess the variability of the data, check for coding inaccuracies, and summarize study variables. Because of a violation of homogeneity of variance and a lack of variability in one of the image generators, a Kruskal-Wallis test was used to compare gender diversity across the three image generators. To compare the three image generators on ethnic diversity and sexualization, Welch’s ANOVA was used because of a violation of homogeneity of variance.

Table 1. Coding Variables and Criteria

| Criteria | Coding Description |
|---|---|
| Sexualization Criteria | Yes (1) or No (0). Does it look like the “Naughty Nurse” image? Is there bright lipstick and heavy makeup? Hair perfectly styled/curled? Body exposure showing excessive skin or emphasizing body parts like breasts and/or buttocks? Suggestive or provocative poses or pouting lips? Seductive or alluring expressions, such as sultry gazes? Tight-fitting, revealing, or lingerie-like clothing? Note: Because the nurse was attractive or wearing makeup did NOT indicate sexualization without these additional factors. |
| Professionalism | Yes (1) or No (2). Does it portray a professional looking nurse with uniform well-groomed, and setting or background is appropriate, realistic? |
| Facial Expression | Happy/Smile (1), Serious (2) |
| Gender | Male (0), Female (1) |
| Ethnicity | Light skin tone/White (1), Dark skin tone/Black (2), Asian (3), Mixed or medium skin tone or Latino (4) |
| Attire | Modern scrubs (1), Old fashioned uniform or dress/skirt (2), Lab coat (3), Other (4) |
| Hat/Cap | Yes (1), No (2) |
| Stethoscope Present | Yes (1) or No (2) |
| Realistic Stethoscope | Yes realistic (1), No warped (2), Not present (3) |
| Name Badge | Yes (1), No (2) |
| Symbols | Medical Red Cross symbol (1), No symbols (2), Other (3) |
| Setting/Background | Neutral/none (1), Hospital/clinical (2), Other (3) |

Results

RQ1: Visual Elements in Generative AI Images of Nurses

The first research question asked was: What are the frequencies of specific recurrent visual elements in AI-generated images of nurses (attire, symbols, settings) that affect the public image of nurses? Of the 288 images in the dataset, ethnicity of nurses as judged by skin tone was 83% light skin tone/white (n = 239), 9.4% dark skin tone/black (n = 27), 3.1% Asian (n = 9), and 4.5% mixed/medium skin tone (n = 13). For gender, 86.5% of the images (n = 249) displayed female nurses and 13.5% displayed male nurses (n = 39). See also Table 2. Facial expressions of nurses displayed included 37.8% happy, 59.3% serious, and 2.9% unable to tell or masked. Sexualization of nurses highlighting the “Naughty Nurse” stereotype was present in 28.8% of images (n = 83). Of all the images, 7% were deemed to be unprofessional (reasons included oversexualization, unprofessional makeup, and stains or blood on the uniform). See Table 3.

Table 2. Ethnicity and Gender Representation in AI-Generated Nurse Images

| Characteristic | Frequency (n) | Percentage (%) |
|---|---|---|
| Ethnicity | | |
| Light skin tone/White | 239 | 83.0 |
| Dark skin tone/Black | 27 | 9.4 |
| Asian | 9 | 3.1 |
| Mixed/Medium skin tone | 13 | 4.5 |
| Gender | | |
| Female | 249 | 86.5 |
| Male | 39 | 13.5 |
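The percentages in Table 2 follow directly from the category counts and the dataset size of 288. As a small illustration, the sketch below recomputes them from the reported frequencies; the function and variable names are hypothetical, and rounding to one decimal place is an assumption about how the table was prepared.

```python
def frequency_table(counts):
    """Map each category to (count, percentage of the total, one decimal)."""
    total = sum(counts.values())
    return {label: (n, round(100 * n / total, 1)) for label, n in counts.items()}


# Frequencies as reported in Table 2.
ethnicity_counts = {
    "Light skin tone/White": 239,
    "Dark skin tone/Black": 27,
    "Asian": 9,
    "Mixed/Medium skin tone": 13,
}
gender_counts = {"Female": 249, "Male": 39}

for name, counts in (("Ethnicity", ethnicity_counts), ("Gender", gender_counts)):
    print(name)
    for label, (n, pct) in frequency_table(counts).items():
        print(f"  {label}: n = {n}, {pct}%")
```

Checking that each variable's counts sum to 288 is a quick way to catch coding omissions before any inferential test is run.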

Table 3. Facial Expressions and Sexualization in AI-Generated Nurse Images

| Characteristic | Frequency (n) | Percentage (%) |
|---|---|---|
| Facial Expression | | |
| Happy | 109 | 37.8 |
| Serious | 171 | 59.3 |
| Unable to tell/Masked | 8 | 2.9 |
| Sexualization | | |
| "Naughty Nurse" Stereotype | 83 | 28.8 |
| Unprofessional Images | 20 | 7.0 |

Analysis of the attire of nurses in the dataset showed: 58.3% wore old fashioned nurse uniforms which included dresses and skirts, 36.5% wore scrubs (the majority were white-colored or blue scrubs), lab coats were present in 2.4%, and 2.7% had unknown other attire. See Table 4. Most images (66.3%) showed nurses with white hats or caps. Stethoscopes were the most common objects noted and were present in 44.8% of images; of those with stethoscopes, 28.5% displayed warped-looking or unrealistic stethoscopes. Name badges were only present in 13.8% of the images of nurses. A red cross (commonly known as the Red Cross symbol) was present in 26.7% of the images and other symbols included shields, pins, crosses, and unknown symbols. See Table 5.

Table 4. Nurse Attire in AI-Generated Images

| Attire Type | Frequency (n) | Percentage (%) |
|---|---|---|
| Old-fashioned nurse uniforms (dresses/skirts) | 168 | 58.3 |
| Scrubs | 105 | 36.5 |
| Lab coat | 7 | 2.4 |
| Other/Unknown | 8 | 2.7 |

Table 5. Common Objects/Symbols in AI-Generated Nurse Images

| Object | Frequency (n) | Percentage (%) |
|---|---|---|
| White nurse hat/caps | 191 | 66.3 |
| Stethoscopes | 129 | 44.8 |
| Name badges | 40 | 13.8 |
| Red Cross symbol | 77 | 26.7 |

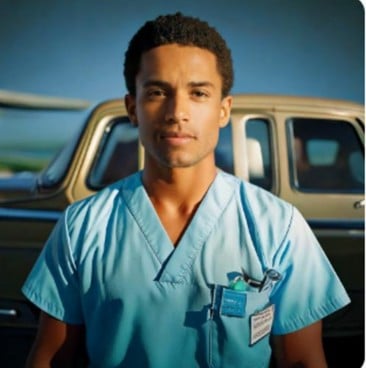

Background settings in the images included: Neutral (60.4%), hospital or clinic (23.9%), and other (15.6%). Other places in the GAI images of nurses included spaceships, airplanes, cars/vans, warehouse hallways, beach, offices, outdoors, and ambulances. See Figure 1 for examples from each of the prompts.

Figure 1. Image Examples from Platforms and Decades

[Image grid not reproduced here. Columns: Midjourney, Adobe Firefly, ImageFx. Rows, one example image per platform for each prompt: 1920s, 1930s, 1940s, 1950s, 1960s, 1970s, 1980s, 1990s, 2000s, 2010s, Nurse of Today, Nurse of the Future.]

RQ2: Gender, Race, and Stereotypes of Nurses Across Image Generators

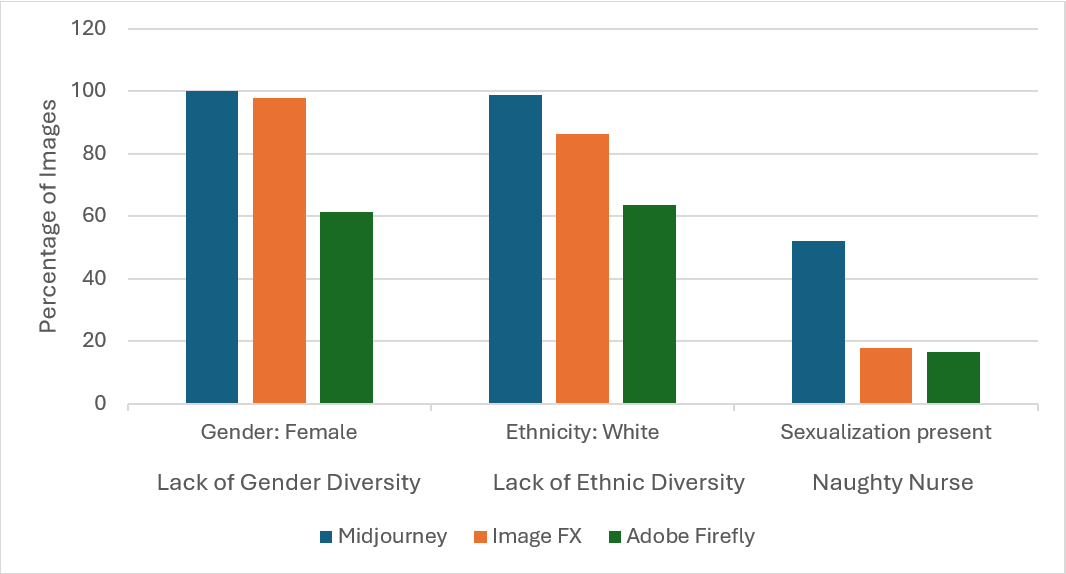

The second research question asked was: How do gender, racial representations, and stereotypical portrayals of nurses in AI-generated images differ across image generator platforms? We compared the three image generators used (Midjourney, Adobe Firefly, and ImageFx) regarding their representation of gender diversity, ethnic diversity, and sexualization of nurse images. A two-sided independent-samples Kruskal-Wallis test revealed a statistically significant difference in gender across the image generators, H(2) = 76.78, p < 0.001. Adobe displayed more gender diversity (female = 61.5%) than Midjourney (female = 100%, p < 0.001) and ImageFx (female = 97.9%, p < 0.001). There was no statistically significant difference between Midjourney and ImageFx (p = 0.674).
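The Kruskal-Wallis statistic for gender can be approximately reconstructed from the reported percentages. The sketch below is an illustration under stated assumptions, not the authors' SPSS analysis: it assumes 96 images per platform and recovers the female counts from the percentages (Midjourney 96, Adobe 59, ImageFx 94, which sum to the reported 249). The function names are hypothetical, and the statistic is computed from scratch with the usual tie correction rather than through a statistics library.

```python
from collections import Counter


def average_ranks(values):
    """Rank values from 1, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            ranks[order[k]] = mean_rank
        pos = end + 1
    return ranks


def kruskal_wallis_h(groups):
    """Tie-corrected Kruskal-Wallis H for a list of samples."""
    pooled = [v for g in groups for v in g]
    n_total = len(pooled)
    ranks = average_ranks(pooled)
    rank_term, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        rank_term += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n_total * (n_total + 1)) * rank_term - 3 * (n_total + 1)
    tie_sum = sum(t ** 3 - t for t in Counter(pooled).values())
    return h / (1 - tie_sum / (n_total ** 3 - n_total))


# Assumed counts: female coded 1, male coded 0, 96 images per platform.
female_counts = {"Midjourney": 96, "Adobe Firefly": 59, "ImageFx": 94}
groups = [[1] * f + [0] * (96 - f) for f in female_counts.values()]

h_stat = kruskal_wallis_h(groups)
print(f"H({len(groups) - 1}) = {h_stat:.2f}")
```

Under these assumptions the statistic comes out at roughly 76.78, in line with the reported value. The p-value would come from a chi-square distribution with 2 degrees of freedom, which this sketch does not compute.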

Welch’s ANOVA demonstrated a statistically significant difference in ethnic diversity across the image generators, F(2, 132.39) = 18.28, p < 0.001. Adobe (non-White = 28.2%) generated ethnic diversity at higher rates than Midjourney (non-White = 1.1%, p < 0.001) and ImageFx (non-White = 11.7%, p < 0.002). There was no statistically significant difference between Midjourney and ImageFx (p = 0.068).

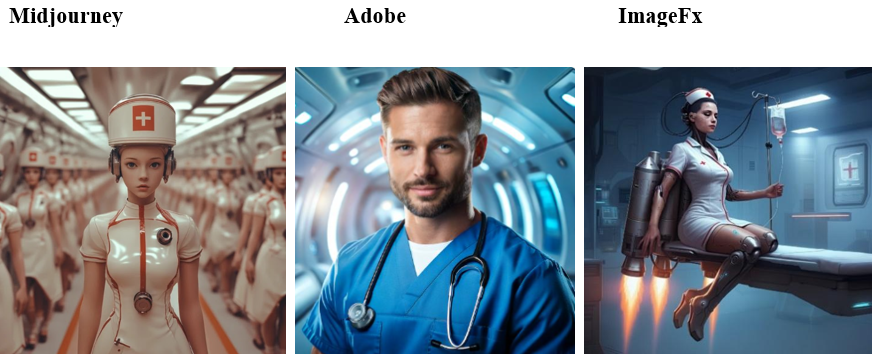

Regarding sexualization, there was a statistically significant difference in sexualization across the image generators, F (2, 187.35) = 18.00, p < 0.001. Midjourney (sexualization = 52.1%) generated sexualization at significantly higher rates than Adobe (sexualization = 16.7%, p < 0.001) and ImageFx (sexualization = 17.7%, p < 0.001). There was no difference between Adobe and ImageFx (p = 1.00). Sexualized images of nurses appeared consistently across all decades, including the "Nurse of the Future" prompt, which portrayed nurses in tight, old-fashioned skirts and hats, sometimes as half-robotic with futuristic space backgrounds (see Figure 2). Table 6 shows a visual representation of the differences between platforms.
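The Welch statistic for sexualization can likewise be approximately reconstructed from the reported percentages. This is a sketch under stated assumptions rather than a rerun of the original analysis: it assumes 96 images per platform and sexualized-image counts of 50 (Midjourney), 16 (Adobe), and 17 (ImageFx), which match the reported percentages and sum to the reported 83. The function name is hypothetical, and the formula is the standard Welch one-way test written out by hand.

```python
from statistics import mean, variance


def welch_anova(groups):
    """Welch's one-way ANOVA; returns (F, numerator df, denominator df)."""
    k = len(groups)
    sizes = [len(g) for g in groups]
    means = [mean(g) for g in groups]
    variances = [variance(g) for g in groups]  # sample variance, n - 1 denominator
    if any(v == 0 for v in variances):
        # A group with no variability gets an infinite weight; the test is undefined.
        raise ValueError("Welch's ANOVA needs non-zero variance in every group")
    weights = [n / v for n, v in zip(sizes, variances)]
    total_weight = sum(weights)
    weighted_mean = sum(w * m for w, m in zip(weights, means)) / total_weight
    between = sum(w * (m - weighted_mean) ** 2 for w, m in zip(weights, means)) / (k - 1)
    lam = sum((1 - w / total_weight) ** 2 / (n - 1) for w, n in zip(weights, sizes))
    f_stat = between / (1 + 2 * (k - 2) / (k ** 2 - 1) * lam)
    df_denominator = (k ** 2 - 1) / (3 * lam)
    return f_stat, k - 1, df_denominator


# Assumed counts: sexualized coded 1, not sexualized coded 0, 96 images per platform.
sexualized_counts = {"Midjourney": 50, "Adobe Firefly": 16, "ImageFx": 17}
groups = [[1] * s + [0] * (96 - s) for s in sexualized_counts.values()]

f_stat, df1, df2 = welch_anova(groups)
print(f"F({df1}, {df2:.2f}) = {f_stat:.2f}")
```

Under these assumptions the result is approximately F(2, 187.35) = 18.00, in line with the reported values. The zero-variance guard also shows why this test could not be applied to gender, where one platform produced only female nurses.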

Figure 2. Nurse of the Future Image Examples

Table 6. Percentage of Images Between Platforms Displaying Biases & Stereotypes

Discussion

This study explored the visual representation of nurses across three popular AI-generation platforms to identify recurring visual elements that reflect implicit biases in portrayals of nurses and the nursing profession. Consistent with most media portrayals of nurses, all three image generators overwhelmingly displayed nurses as female (86.5%). This aligns with current research indicating that globally 76.91% of nurses are female (Kharazmi et al., 2023). Although the portrayal of nurses as predominantly female in this dataset reflects current demographics, this representation can inadvertently harm efforts to increase diversity in nursing. By reinforcing the perception of nursing as a "female job," these depictions may discourage men from pursuing careers in the field, making them feel excluded or unwelcome. This lack of inclusivity limits diversity and can hinder efforts for the profession to benefit from a broader range of perspectives and skills that a more gender-balanced workforce could provide.

When examining the images in the dataset, almost all displayed an idealized, visually pleasing nurse free from imperfections. Nurses were flawless in appearance with slim body frames. These images created by GAI and social media are important as they perpetuate unrealistic beauty standards and gender biases. The upstream implications of this biased imagery may shape social perceptions about the nursing profession even before individuals consider entering the field by reinforcing stereotypes about how nurses are expected to appear (Fernández de Caleya Vázquez & Garrido-Merchán, 2024). If left unchallenged, potential influences of GAI may contribute to long-term nursing shortages and hinder efforts to promote inclusivity.

From an ethical perspective, the biases in GAI training data raise concerns as to who controls the public image of nursing and how these portrayals impact recruitment and retention efforts. Some images included nurses wearing personal protective equipment as a standard part of the uniform and these may reflect the public’s post-COVID view of nurses. Yet, the “healthcare heroes” label often assigned to nurses during the pandemic did not present itself in the images (Jackson, 2021). The need for intentional representation is especially critical given that our AI-generated images depicted nurses as predominantly Caucasian/white (83%). Among the platforms examined, Midjourney exhibited the least diversity in skin tone, while Adobe Firefly demonstrated the greatest gender and ethnic diversity in its representations. As the U.S. population becomes increasingly diverse, with racial or ethnic minorities projected to comprise 50% of the population by 2024 (Vespa et al., 2020), it will be important to display more images of nurses with diverse ethnicities within recruitment materials for schools of nursing. Addressing biases within media portrayals of nurses is critical to attract racially diverse nurses who can provide equitable care to a racially diverse nation in all fields of nursing.

One practical way to overcome the bias of GAI is to use more specific prompts to delineate the skin tone desired for a nursing image. This strategy can intentionally create more diverse images of nurses, which can potentially help recruitment efforts. As large language models learn from user input and feedback, nurses can utilize GAI to challenge stereotypes and promote fair, ethical representations of nurses by actively engaging in content creation (Elyoseph & Levkovich, 2024). Advocacy and participation by nurses in responsible AI development can promote a more accurate and diverse representation of the profession, helping to reshape the public perception of nursing.

During World War II, nurses' vital contributions briefly altered public perception, but the enduring "angel of mercy" stereotype, rooted in Florence Nightingale’s legacy from the Crimean War, persisted in literature and film. However, this portrayal was later overshadowed by television shows of the 1960s and 1970s, which cemented the "sexy nurse" stereotype, diminishing the profession’s knowledge and contributions (Thompson, 2014). A landmark study by Kalisch and Kalisch (1982) found that during this period, 73% of films portrayed nurses as sexual objects. Additionally, the recurring media theme of nurse-physician relationships presents an unprofessional and hypersexualized image of nurses in the workplace (Godsey et al., 2020). The sexualization of nurses remains a persistent issue, deeply embedded in media portrayals and the sex/pornography industry (Ferns & Chojnacka, 2005). Sexualization of nurses appeared in 28.8% of the images analyzed in this study, often in form-fitting white scrubs and old-fashioned tight skirts. These depictions often also included sultry expressions, provocative gazes, heavy make-up, red lipstick, open mouths, and visible cleavage, perpetuating persistent and demeaning stereotypes of nurses.

Kalisch and Kalisch (1987), prolific researchers on the portrayal of nurses in the media, have long warned about how these misrepresentations can shape public perceptions of nurses and their role in society. This warning remains highly relevant today, particularly with the resurgence of white scrubs: some studies indicate that patients prefer white uniforms for nurses and rate this color as more professional looking (Albert et al., 2008; Porr et al., 2014). In this study, nurses wearing white were more likely to exhibit the traits related to sexualization discussed above. White scrubs, often linked to notions of sexual purity and morality, may serve to reinforce outdated stereotypes that undermine the role of nurses as autonomous, highly educated professionals (Elder, 2022). While white scrubs may be a symbol of cleanliness and recognizable nursing attire, employers may disregard concerns about the sheerness of this color and its historical association with sexualization. To combat the oversexualization of nurses, the nursing profession must actively challenge harmful stereotypes of sexualized nurses through media representation, education, and advocacy. Professional nursing organizations and groups like www.truthaboutnursing.org advocate for more accurate portrayals of nurses in television, film, and advertising while promoting a campaign that highlights the skill, intelligence, and dedication of nurses.

Another important finding in this study was that only 2.4% of images portrayed nurses wearing lab coats, attire traditionally associated with physicians and advanced-level healthcare providers. Picturing most nurses wearing scrubs (and not lab coats) can reinforce misperceptions of nurses as unskilled, unknowledgeable, and lower-level caregivers. This is concerning because, in 2020, over 17% of RNs held a master's or doctoral degree. The lack of representation of diverse nursing roles can negate advances in nursing practice by misinforming the public on the full scope of advanced practice nurses, nurse educators, and nurse leaders. Furthermore, the equipment and skills of nurses exceed the use of a stethoscope, the most common symbol portrayed by GAI (also pictured incorrectly in 28.5% of images with stethoscopes). One limitation of GAI images is that they often portray nurses in outdated ways, such as wearing white hats (66.3% of images) or Red Cross symbols (26.7% of images), which are rarely seen on contemporary nursing uniforms.

Those using GAI to create images of nurses should be aware of these biases and focus on crafting, testing, and utilizing precise prompts to generate more inclusive and professional images. Educating large language models through the use of more detailed and deliberate prompts can intentionally increase the number of images available for public display that feature racial and gender diversity. As a profession, it is important to unite and insist on dispelling antiquated historical stereotypes in favor of accurate media portrayals of contemporary nurses that reflect diversity and autonomy. Implementing policies and educational initiatives that enhance gender and racial representation within the nursing profession, and in the images that depict it, will play a crucial role in recruiting, retaining, and supporting a diverse workforce, ultimately ensuring a more sustainable and inclusive future for nursing.
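
As a rough illustration of what crafting and testing precise prompts might look like in practice, the sketch below builds one prompt for each combination of a few attributes. The attribute lists, the prompt wording, and the function name `build_prompts` are hypothetical choices made for this example; they are not the prompts used in this study and are not tied to any particular image generator.

```python
from itertools import product

# Hypothetical attribute lists, chosen only for illustration (not from the study).
SKIN_TONES = ["dark", "medium", "light"]
GENDERS = ["woman", "man"]
ATTIRE = ["navy scrubs", "a lab coat over scrubs"]


def build_prompts(role="registered nurse"):
    """Return one text-to-image prompt per combination of the attributes above."""
    prompts = []
    for tone, gender, attire in product(SKIN_TONES, GENDERS, ATTIRE):
        prompts.append(
            f"Photo of a {role}, a {gender} with {tone} skin tone, "
            f"wearing {attire}, in a contemporary hospital setting"
        )
    return prompts


if __name__ == "__main__":
    # Print each prompt so it can be pasted into an image generator and reviewed.
    for prompt in build_prompts():
        print(prompt)
```

Generating the full set of combinations, rather than writing a single prompt, makes it easier to compare the resulting images side by side and to notice which attributes a given generator tends to ignore.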

Conclusion

This study exposed the implicit biases and historical stereotypes in AI-generated images of nurses, which can reinforce outdated notions of gender, race, and the sexualization of nurses. While the depictions of race and gender align with current demographics, such portrayals can reinforce narrow perceptions of nursing as a female-only, racially homogeneous, and hypersexualized profession. Biased representations of nurses in images can undermine efforts to attract a diverse workforce and elevate the public image of the profession. Addressing these biases requires a more intentional approach to the use of generative AI tools, including the implementation of specific prompts that emphasize diversity, professionalism, and the evolving role of nurses in healthcare. By challenging and correcting these distorted portrayals, we can foster a more inclusive and accurate representation of nursing, one that supports diversity, strengthens recruitment, and ensures the profession reflects the needs of an increasingly diverse society.

Authors

Janet Reed, PhD, RN, CMSRN

Email: JReed56@kent.edu

ORCID ID: https://orcid.org/0000-0003-3905-4156

Janet Reed is an Assistant Professor of Nursing at Kent State University specializing in medical-surgical nursing and geriatrics. She holds an ADN, BSN, MSN in Nursing Education, and PhD in Educational Technology. Her research examines the effects of technology including generative AI and simulation in nursing. For 12 years she has taught senior nursing students about professional issues affecting the nursing profession.

Tracy M. Dodson, PhD, RN

Email: tdodson4@kent.edu

ORCID ID: https://orcid.org/0000-0003-4499-8143

Tracy Dodson began her career as an LPN and has earned BSN and MSN degrees and a PhD in Curriculum and Instruction with a focus on Education Technologies. Tracy teaches several courses, including Professional Issues in Nursing and Evidence Based Practice, both of which address potential issues nurses face in today’s healthcare climate. Tracy also researches the use of generative AI to prepare students for nursing simulation.

Amy B. Petrinec, PhD, RN

Email: apetrine@kent.edu

ORCID ID: https://orcid.org/0000-0002-3267-3651

Amy Petrinec is the Interim Associate Dean of Research at the Kent State University College of Nursing. She has a nursing background in critical care and palliative/end-of-life care with research focused on caregiver and older adult symptom science and health-related quality outcomes. In this article, Amy contributed expertise in statistical and data analysis.

Delaney Tennant, BSN graduate

Email: dtennan2@kent.edu

Delaney Tennant is a recent BSN graduate at Kent State University where she worked as a research assistant and served as the Vice-President of the Student Nurses Association. She gained experience on several research projects using AI and contributed her skills in image generation for this project.

Jenna Chmelik, BSN, RN

Email: jchmelik@kent.edu

Jenna Chmelik is a BSN graduate from Capital University. She is currently enrolled in the MSN program at Kent State University where she is studying to become a nurse educator. She has worked as a research assistant for the past year on several research projects using AI with Dr. Reed.

Shawnna Cripple, BSN graduate

Email: scrippl1@kent.edu

Shawnna Cripple is a recent BSN graduate at Kent State University where she worked as a research assistant and completed a Summer Undergraduate Research Experience with Dr. Reed. She assisted with image creation and management for this project.

References

Albert, N. M., Wocial, L., Meyer, K. H., Na, J., & Trochelman, K. (2008). Impact of nurses' uniforms on patient and family perceptions of nurse professionalism. Applied Nursing Research, 21(4), 181–190. https://doi.org/10.1016/j.apnr.2007.04.008

Blau, A., Sela, Y., & Grinberg, K. (2023). Public perceptions and attitudes on the image of nursing in the wake of COVID-19. International Journal of Environmental Research and Public Health, 20(6), 4717. https://doi.org/10.3390/ijerph20064717

Byrne, A. L., Mulvogue, J., Adhikari, S., & Cutmore, E. (2024). Discriminative and exploitive stereotypes: Artificial intelligence generated images of aged care nurses and the impacts on recruitment and retention. Nursing Inquiry, 31(3), e12651. https://doi.org/10.1111/nin.12651

Caliskan, A. (2023, August). Artificial intelligence, bias, and ethics. Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence. Pages 7007-7013. https://doi.org/10.24963/ijcai.2023/799

Carroll, D. M., & Harris, T. J. (2024). Can we finally move the needle on diversity, equity, and inclusion in Nursing? Nursing Administration Quarterly, 48(1), 65–70. https://doi.org/10.1097/NAQ.0000000000000617

Currie, G., Hewis, J., & Ebbs, P. (2024a). Gender bias in text-to-image generative artificial intelligence depiction of Australian paramedics and first responders. Australasian Emergency Care. https://doi.org/10.1016/j.auec.2024.11.003

Currie, G., Chandra, C., & Kiat, H. (2024b). Gender bias in text-to-image generative artificial intelligence when representing cardiologists. Information, 15(10), 594. https://doi.org/10.3390/info15100594

Edwards-Maddox, S., Reid, A., & Quintana, D. M. (2022). Ethical implications of implicit bias in nursing education. Teaching and Learning in Nursing, 17(4), 441-445. https://doi.org/10.1016/j.teln.2022.04.003

Elder, R. (2022). White suits and kangaroo kills: Making men's careers in American nursing. Gender & History, 34(1), 153-178. https://doi.org/10.1111/1468-0424.12526

Elyoseph, Z., & Levkovich, I. (2024). Comparing the perspectives of generative AI, mental health experts, and the general public on schizophrenia recovery: Case vignette study. JMIR Mental Health, 11, Article e53043. https://doi.org/10.2196/53043

Fernández de Caleya Vázquez, A., & Garrido-Merchán, E. C. (2024). A taxonomy of the biases of the images created by generative artificial intelligence. Computers and Society, arXiv-2407.01556. https://doi.org/10.48550/arXiv.2407.01556

Ferns, T., & Chojnacka, I. (2005). Angels and swingers, matrons and sinners: Nursing stereotypes. British Journal of Nursing, 14(19), 1028–1032. https://doi.org/10.12968/bjon.2005.14.19.19947

Godsey, J.A., Houghton, D.M., & Hayes, T. (2020). Registered nurse perceptions of factors contributing to the inconsistent brand image of the nursing profession. Nursing Outlook, 68(6), 808–821. https://doi.org/10.1016/j.outlook.2020.06.005

González, H., Errasti‐Ibarrondo, B., Iraizoz‐Iraizoz, A., & Choperena, A. (2023). The image of nursing in the media: A scoping review. International Nursing Review, 70(3), 425-443. https://doi.org/10.1111/inr.12833

Hopkins, S. (2021). Nurse Margaret “Hot Lips” Houlihan as gendered hate object. The Nurse in Popular Media: Critical Essays, 69. Jefferson, United States. McFarland & Company, Inc.

Jackson, J. (2021). Supporting nurses’ recovery during and following the COVID-19 pandemic. Nursing Standard, 36(3), 31-34. https://doi.org/10.7748/ns.2021.e11661

Kalisch, P.A., & Kalisch, B.J. (1982). The image of the nurse in motion pictures. American Journal of Nursing, 82(4), 605–611.

Kalisch, P.A., & Kalisch, B.J. (1987). The changing image of the nurse. California: Addison-Wesley Publishing Company.

Kharazmi, E., Bordbar, N., & Bordbar, S. (2023). Distribution of nursing workforce in the world using Gini coefficient. BMC Nursing, 22(1), 151. https://doi.org/10.1186/s12912-023-01313-w

López-Verdugo, M., et al. (2021). Social image of nursing. An integrative review about a yet unknown profession. Nursing Reports, 11(2), 460–474. https://doi.org/10.3390/nursrep11020043

Mohammed, S., Peter, E., Killackey, T., & Maciver, J. (2021). The “nurse as hero” discourse in the COVID-19 pandemic: A poststructural discourse analysis. International Journal of Nursing Studies, 117, 103887. https://doi.org/10.1016/j.ijnurstu.2021.103887

Neuendorf, K. A. (2002). The content analysis guidebook. SAGE Publications, Inc.

Paskaleva, T., Biyanka, T., & Dimitar, S. (2020). Professional status of men in nursing. Journal of IMAB, 26(1), 3029-3033. https://doi.org/10.5272/jimab.2020261.3029

Porr, C., Dawe, D., Lewis, N., Meadus, R. J., Snow, N., & Didham, P. (2014). Patient perception of contemporary nurse attire: A pilot study. International Journal of Nursing Practice, 20(2), 149–155. https://doi.org/10.1111/ijn.12160

Quinn, B. G., O'Donnell, S., & Thompson, D. (2022). Gender diversity in nursing: Time to think again. Nursing Management, 29(2), 20–24. https://doi.org/10.7748/nm.2021.e2010

Reed, J. M., Alterio, B., Coblenz, H., O’Lear, T., & Metz, T. (2023). AI image generation as a teaching strategy in nursing. Journal of Interactive Learning Research. Special issue on artificial intelligence. 34(2), 369-399. Waynesville, NC: Association for the Advancement of Computing in Education (AACE). https://www.learntechlib.org/primary/p/222304/

Reed, J. M. (2024). Students’ fears of the nursing profession through AI-generated artistic images. Western Journal of Nursing Research, 46 (1), suppl, pages 1S-57S. https://doi.org/10.1177/01939459241233357

Riffe, D., Lacy, S., Watson, B. R., & Lovejoy, J. (2014). Analyzing media messages: Using quantitative content analysis in research (3rd ed.). Routledge.

Samala, A.D., Rawas, S., Wang, T., Reed, J.M., Kim, J., Howards, N. & Ertz, M. (2024). Unveiling the landscape of generative artificial intelligence in education: A comprehensive taxonomy of applications, challenges, and future prospects. Education and Information Technologies. https://doi.org/10.1007/s10639-024-12936-0

Smiley, R., Reid, M., & Martin, B. (2024). The registered nurse workforce: Examining nurses’ practice patterns, workloads, and burnout by race and ethnicity. Journal of Nursing Regulation, 15(1), 65-79. https://doi.org/10.1016/S2155-8256(24)00030-9

Smiley, R. A., Ruttinger, C., Oliveira, C. M., et al. (2021). The 2020 national nursing workforce survey. Journal of Nursing Regulation, 12(1), S1–S96. https://doi.org/10.1016/S2155-8256(21)00027-2

Stokes‐Parish, J., Elliott, R., Rolls, K., & Massey, D. (2020). Angels and heroes: The unintended consequence of the hero narrative. Journal of Nursing Scholarship, 52(5), 462. https://doi.org/10.1111/jnu.12591

Summers, S., & Summers, H. (2014). Saving lives: Why the media's portrayal of nurses puts us all at risk (2nd ed.). Oxford University Press.

Teresa-Morales, C., Rodríguez-Pérez, M., Araujo-Hernández, M., & Feria-Ramírez, C. (2022). Current stereotypes associated with nursing and nursing professionals: An integrative review. International Journal of Environmental Research and Public Health, 19(13), 7640. https://doi.org/10.3390/ijerph19137640

Thompson, H. (2014). The evolution of the nurse stereotype via postcards: From drunk to saint to sexpot to modern medical professional. Smithsonian Magazine. https://www.smithsonianmag.com/history/evolution-nurse-stereotype-postcards-drunk-saint-sexpot-modern-medical-professional-180952725/

van der Cingel, M., & Brouwer, J. (2021). What makes a nurse today? A debate on the nursing professional identity and its need for change. Nursing Philosophy, 22(2), e12343. https://doi.org/10.1111/nup.12343

Vespa, J., Medina, L., & Armstrong, D. M. (2020). Demographic turning points for the United States: Population projections for 2020 to 2060. In Current population reports, P25-1144. Washington, DC: U.S. Census Bureau.

Yavaş, G., & Özerli, A. N. (2023). The public image of nursing during the COVID‐19 pandemic: A cross‐sectional study. International Nursing Review. https://doi.org/10.1111/inr.12922

Zhou, L., Godsey, J. A., Kallmeyer, R., Hayes, T., & Cai, E. (2024). Public perceptions of the brand image of nursing: Cross-cultural differences between the United States and China. Nursing Outlook, 72(5), 102220. https://doi.org/10.1016/j.outlook.2024.102220