Adoption and integration of artificial intelligence (AI) is on the rise, present in almost every aspect of public life and clinical care. Whether care workers embrace AI or not, they are increasingly impacted by these technologies, as is the very nature of care work. While the integration and use of some forms of AI may improve care quality and outcomes, AI use can also cause harm. As with any tool used in care work, adverse impacts of AI must be recognized, addressed, and to the greatest extent possible, prevented. Where possible, harm inflicted through the use of AI tools in care work and on care workers must also be repaired. Care workers, including nurses, have a social contract to support the health and healing of the persons they accompany in care, including each other. ‘Digital defense’ represents efforts to prevent, protect, and heal from harms associated with technologies that incorporate big data and artificial intelligence. Here I present an adapted framework for understanding relationships between care workers’ collective well-being and documented harms of AI. I then propose a series of digital defense tactics and collective action strategies care workers can use to protect each other and heal from potential and actual adverse consequences of AI in healthcare.

Key Words: artificial intelligence, digital defense, occupational safety, civil rights, ethics, algorithmic justice

Artificial intelligence (AI) is an ambiguous concept whose definition continues to evolve in relation to the state of the science and public perception (Katz, 2020). In its most general sense, AI refers to computers performing tasks that resemble those which might otherwise require human intelligence (McGrow, 2019). Often AI is used synonymously with terms like “machine learning,” a field of inquiry in mathematics and engineering sciences whose roots date back to the 1950s (Crawford, 2021). AI technologies encompass multiple sub-fields, methodologies, and technical innovations including but not limited to computer vision, neural networks, deep learning, generative AI, natural language processing, and robotics (Arora et al., 2024). Table 1 provides a glossary with definitions and further description of these technologies and other common AI terms.

Table 1. Glossary of Terms

| Term | Definition |
|---|---|
| Administrative Violence | Administrative norms of legal systems that create structured insecurity and (mal)distribute life chances across targeted populations, even when official rules of nondiscrimination against those populations are in place (Spade, 2015). |
| AI Virtual Nurse | A label created by tech companies for online chatbots that rely on generative AI agents to simulate multi-turn conversations with an avatar made to look like a clinician. Such chatbots (available from vendors such as Hippocratic AI and Google) have been advertised as able to assist in routine tasks (e.g., reviewing common drug toxicities, providing lifestyle advice, or identifying lab values outside of normal limits). At this time, the tech cannot diagnose or legally prescribe medications in the United States (Mukherjee et al., 2024). |
| Algorithm | A sequence of steps for solving a problem, much like a recipe. In digital terms, it is a set of computational or mathematical formulas that use data as their main ingredient, transforming these data (input) into desired outputs (Lewis et al., 2018). |
| Algorithmic Bias | Systematic and repeated errors in a computer-based system that result in disparate or inequitable outcomes. Algorithmic bias can arise from bias in the data, prejudice in the design of algorithms, and socio-technical factors such as structural and social determinants like racism, ableism, and sexism (What Is Algorithmic Bias?, n.d.). |
| Ambient Intelligence | A pervasive computing environment wherein devices unobtrusively embedded in the space can interact with and automatically respond to human users by detecting human movement, voice, or other cues. A prominent example of this is voice-activated assistants like Siri or Alexa (Ambient Intelligence for Healthcare (AmI4HC), n.d.). |
| Automated Acuity Scoring | Automatic assignment of a score to a patient based on an algorithm designed to assess the severity of their condition. This score is used to estimate how much care the patient will require and priority in relation to other patients (Fitzpatrick et al., 1992). |
| Automated Worker Surveillance & Management (AWSM) | Systems used by employers to automatically monitor, manage, and evaluate workers. These applications can pose risks to workers, including to their health and safety, equal employment opportunities, privacy, ability to meet critical needs, access to workplace accommodations, and exercise of workplace and labor rights, such as the right to form or join a labor union (Request for Information; Automated Worker Surveillance and Management, 2023). |
| Big Data | Datasets too large to process on a personal computer. They are much larger, are created or added to more quickly, are more varied in structures, and are stored on large, cloud-based storage systems (Big Data, n.d.). |
| Black Box | An electronic device or AI model whose design and internal mechanisms are hidden from or mysterious to the user, on purpose (Definition of BLACK BOX, 2025). |
| ChatGPT | A type of generative artificial intelligence chatbot developed by the company OpenAI and launched in 2022. ChatGPT uses a large language model (LLM) to generate human-like conversational responses and enables users to refine and steer a conversation towards a desired length, format, style, level of detail, and language (“ChatGPT,” 2025). |
| Clinical Decision Support Systems (CDSS) | Computer systems designed to support clinicians in complex decision-making processes. First introduced in the 1980s, CDSS are now commonly embedded in electronic health records (EHRs) and other digitized clinical workflows (Sutton et al., 2020). |
| Coded Bias | A term coined by sociologist Ruha Benjamin that denotes results of tech designers’ and adopters’ imagined objectivity and indifference to social reality. Coded bias is embedded directly into datasets and design, resulting in technologies that inadvertently or intentionally systematically discriminate and promote anti-Blackness (Benjamin, 2020). |
| Cognitive Ergonomics | A branch of human factors and ergonomics focused on supporting the cognitive processes of individuals within a system. In healthcare, where the cognitive burden on clinicians is often high, cognitive ergonomics seeks to establish practices and systems that decrease extraneous cognitive load and support pertinent cognitive processes (Li-Wang et al., n.d.). |
| Computer Vision | Simulation of biological vision using computers and related equipment to obtain three-dimensional information from pictures or videos and subsequently interpret or identify those images (Gao et al., 2020). |
| Data Center | An industrial computing service infrastructure involving one or more facilities that houses computer systems and their associated components. Equipment in data centers must be cooled, often using fresh water to remove the excess heat. A typical data center contains tens to hundreds of thousands of servers. Data centers are essential to the function of many AI and big data systems, including cloud computing and large language models that form the basis for generative AI (Dai et al., 2017). |
| Deep Learning | A subset of machine learning that attempts to mimic functions of the brain by stacking artificial neurons into layers and "training" them to process data, in order to perform tasks such as image classification. The "deep" in deep learning refers to the number of layers of neurons in the network (“Deep Learning,” 2025). |
| Digital Defense | In cybersecurity, digital defense represents efforts to prevent or thwart cyber-attacks. In community settings, digital defense is a set of relational practices designed to protect oneself and one’s neighbors from harmful consequences of data-driven systems and surveillance (Lewis et al., 2018). |
| Generative AI | A type of machine-learning model designed to generate more objects that look like the data it was “trained” on. Outputs can include text, images, or the next step in a process, like a sequence of amino acids in a protein chain. Generative AI architectures vary and can include generative adversarial networks, diffusion models, and large language models – the basis for technologies like ChatGPT and DeepSeek (Zewe, 2023). |
| Invisible Labor | Coined by sociologist Arlene Kaplan Daniels in 1987, “invisible work” is a term that refers to necessary activity that largely happens in the home or private settings and is almost always unpaid or underpaid. While it requires knowledge and skill, it is rarely recognized or valued as work. Invisible labor is often gendered, classed, and racialized. Common examples include domestic labor and informal caregiving (Frizzell, 2023). |
| Large Language Model (LLM) | A type of AI “trained” on a massive amount of text to learn the rules of language. LLMs can be used to translate, summarize, and generate text (Okerlund et al., n.d.). |
| Machine Learning (ML) | A sub-field of AI that involves allowing computer models to “learn” to perform tasks that emulate functions of human intelligence such as description, prediction or prescription. Machine learning starts with a large amount of data. |
| Matrix of Domination | Coined by sociologist Patricia Hill Collins in her book Black Feminist Thought to describe four inter-related domains (structural, disciplinary, hegemonic, and interpersonal) that organize power relations in society to shape human action and maintain the status quo. The matrix of domination includes systems of oppression such as white supremacy, settler colonialism, and patriarchy (Hill Collins, 1990). |
| Model Card | First proposed by computer scientist Margaret Mitchell, model cards are short documents accompanying trained machine learning models that provide benchmarked evaluation of the model across a variety of conditions, such as when the model is applied to different cultural, demographic, phenotypic and intersectional groups. Much like a medication label, model cards explain the context in which AI models are intended to be used. They also include information about how the AI model was evaluated, and other relevant information (Mitchell et al., 2019). |
| Natural Language Processing (NLP) | A sub-field of AI, computer science and linguistics that deals with how computers understand, process, and manipulate human languages (Natural Language Processing, n.d.). |
| Repair Work | Repair work is the labor of integrating a new and possibly disruptive technology into the human complexities of a professional context. It can take many forms, such as emotional labor and rendering expert judgment. This day-to-day labor is necessary, consistently undervalued in comparison to the more visible labor of “innovation”, frequently gendered and classed, and often rendered invisible (Elish & Watkins, 2020). |
| Taylorism | Named after the American engineer Frederick Winslow Taylor and popularized by his book on industrial management published in 1911, Taylorism is a management approach designed to increase efficiency and productivity. Workflows and work processes are systematically examined and relentlessly “optimized” with the goal of increasing efficiency while reducing costs (Rutledge, 2016). |

These days it is nearly impossible to go to work or school, attend a conference, read the newspaper, or even open one’s email without some mention of AI. While not a new concept, AI development and adoption have accelerated and scaled exponentially across almost every sector of society, including healthcare (Ronquillo et al., 2021). AI technologies are now used in automated clinical decision support, voice-aided documentation, continuous patient monitoring, robotic assistants, chatbots embedded in the patient portal, diagnostic aids, and even to power so-called “AI nurses” (“$9 AI ‘Nurses’?,” 2024).

Though AI for healthcare has faced significant vocal opposition from across many of the care work disciplines, such resistance has faltered in recent years. An increasingly dominant narrative sounds something like, “AI is here to stay so we might as well learn to live with it!” (Park et al., 2024; Swan, 2021). This sentiment is reflected across many facets of leadership for care disciplines such as nursing, from the proliferation of AI in the titles of keynotes (this author included) to a veritable algal bloom of independent AI task forces, each operating in its own silo (American Academy of Nursing [AAN], n.d.; American Nurses Association [ANA] Center for Ethics and Human Rights, 2022; Cary et al., 2024; International Council of Nurses, 2023; National Academy of Medicine et al., 2021; Ronquillo et al., 2021). Naysayers and dissidents have been painted as either ignorant of AI’s potential or anti-progress: their arguments – at best – irrelevant in the face of the relentless technological expansion (Higgins, 2024; Kwok, 2023; Leong, 2024).

The COVID-19 pandemic has only accelerated this narrative shift. With many health employers culling their workforces and relying on temporary contracts to fill the gaps left by high turnover and mass exodus of more experienced staff, over-burdened and under-supported care workers who remain are understandably grasping for solutions that may improve their situation and keep the ship afloat (Onque, 2025; Ranen & Bhojwani, 2020; Swan, 2021; Tahir, 2025). Major media outlets covering this transformation of the healthcare workforce have simultaneously trumpeted tech industry messaging regarding the potential of AI to “revolutionize” healthcare, optimize workflows, eliminate health disparities, and provide robust solutions to what are, at their roots, structural vulnerabilities created by decades of disinvestment by industry leaders and the United States (U.S.) government.

Though news outlets have insisted they are simply reporting on the issues, social psychologists have given such strategic messaging another name: “manufacturing consent” (Lippmann, 1922). As health systems flail, private industry has rushed to capitalize on the chaos through the sale and expansion of their proprietary technologies, many of which rely on AI and big data (Klein, 2023). Massive corporations (e.g., Alphabet, Apple, Amazon, Meta, and OpenAI) own and control much of the global infrastructure necessary to run deep learning (DL) applications, large language models (LLMs) powering generative AI, and other forms of AI for healthcare. These corporations are now engaged in a bidding war over who will win government and private industry contracts to solve America’s healthcare crisis (Pearl, 2022; Ross & Palmer, 2022), even as rank and file care workers remain wary of what such changes will entail ([National Nurses United], 2025).

Assumptions and Purpose of Discussion

This article rests on the following foundational assumptions: (a) that the application of AI to healthcare, like all other technologies that have come before it – from the scalpel to acetaminophen – is capable of causing harm and therefore will cause harm in some contexts, even when applied appropriately; (b) that such harm will occur in patterns that may be predictable in some situations and appear random in others; (c) that, as with technologies like the surgical scalpel and acetaminophen, the existence of harm does not erase possible benefits of this technology, which may exist for some groups and in certain contexts; (d) that, because structures of oppression shape AI-related harms, efforts to counter those harms will need to include structural solutions that address power imbalances; and (e) ergo, while there is a universe of possible diverse applications of AI, all of which may impact care workers in unique ways, effective efforts to safeguard care workers’ collective well-being against harm from one type of AI may help to shield against others as well.

The purpose of this article is not to argue whether AI is beneficial or harmful for care workers and the communities they serve (though there is certainly a growing body of evidence reporting various impacts). In many cases, answers to this question will depend both on the specific AI application and context and on whose perspective is centered in the framing of what constitutes a benefit or harm. AI is such a broad and rapidly evolving set of ideas and technologies as to defy simple definitions or concretization, by design (Katz, 2020). Posing the question “Is AI helpful or harmful?” oversimplifies the complexity of just what AI is and how it operates in a socio-technical society. The form of this query presents the dilemma as a sort of zero-sum game with binary outcomes, where the answer (i.e., pro or con) will inform a singular decision maker who can then wave a magic wand to either adopt or decline the AI in question.

This expectation is neither realistic nor respectful of the ways in which power and positionality shape not only the answers to questions about AI in care work, but the very questions themselves (Narayanan & Kapoor, 2024). Therefore, rather than continue to litigate the “does AI help or harm?” debate, the purpose of this article is to assess established harms of some forms of artificial intelligence on care workers and their collective well-being. The discussion first presents a framework to describe dimensions of care worker collective well-being impacted by documented AI-related harms. Following this, actions that care workers can take – individually and collectively – to protect themselves and each other against such harms are explored. This practice is also known as digital defense.

Use of Artificial Intelligence in Care Work

There is evidence to support potential use cases for consentful application of certain types of AI in the performance of care work. For example, machine learning may facilitate early identification and outreach to patients who would benefit from access to important services such as medical interpretation (Barwise et al., 2023). Deep learning models used by wearable sensors like smart watches or garments may aid in continuous monitoring of vital signs or other parameters outside of the acute care setting, allowing for early detection of health events that require immediate intervention (Hasan & Ahmed, 2024; Yu & Zhang, 2024).

Though a recent systematic review found that no AI models to date have outperformed human radiologists, some forms of AI like computer vision may eventually help to improve diagnostic accuracy of scans to detect cancerous tumors, shifting the nature of care work required to support those affected (Seo et al., 2024). Robot-assisted surgery has been a gold standard of care for procedures like radical prostatectomy for many years now (Chopra et al., 2012). Scientists are currently investigating uses of AI technologies like natural language processing to address health inequities, such as reducing the use of racist and stigmatizing language in healthcare documentation (Beach, n.d.; Topaz, n.d.). While most efforts to systematically evaluate long-term impacts of these projects are still in the early stages of development, some outcomes are promising (Ronquillo et al., 2021).

The presence of evidence-informed, positive use cases for AI does not diminish the importance of understanding actual and potential negative outcomes of this technology. Robust and growing literature outlines risks and harms associated with AI technologies and the resources they require (Benjamin, 2020; McQuillan, 2023; Ricaurte, 2022; Walker et al., 2023). AI harms are disproportionately distributed to groups less likely to be represented among decision-makers for AI adoption, including but not limited to front-line workers (Garofalo et al., 2023; Tahir, 2025). AI for healthcare is also not transparent or obvious when it is in operation, an issue regulators have yet to fully address (U.S. Food and Drug Administration [FDA], 2025; van Genderen et al., 2025).

Even as disciplinary leaders, industry representatives, and academics repeat the AI-is-here-so-get-used-to-it mantra, rank and file nurses continue to express distress at the imposition of this technology by employers without their express consent (Garofalo et al., 2023; NNU, 2025). A recent survey of over 2300 California nurses conducted by NNU indicated that 60% of respondents disagreed with the statement: I trust my employer will implement AI with patient safety as the first priority (NNU, 2023). This survey also revealed that nearly a third of nurses were unable to alter AI-automated acuity scores and other patient assessments in the electronic health record, even though these evaluations differed from the nurse’s expert clinical judgment nearly half of the time (NNU, 2023).

While those less proximate to direct care continue to expound on benefits of AI and shareholders reap record profits from AI big tech and start-ups, direct care workers must scramble to hold the line at a time when many care centers are under-staffed, under-resourced, and facing skyrocketing demand. The care workforce has been broken, and many are just trying to get by (Brangham et al., 2024; Neumann & Bookey-Bassett, 2024; Sarfati et al., n.d.). Care workers have expressed the view that AI is often imposed, rather than consentfully negotiated, leaving them to figure out on their own how to make it work (Elish & Watkins, 2020; Frennert et al., 2023).

While technologists, informaticists, and some policy makers continue experiments to create AI that is more ethical and able to actually deliver on any of the numerous promises made for its revolutionary potential, care workers must ensure delivery of safe and effective care (Ronquillo et al., 2021). This includes addressing profound disruptions created by rapid AI adoption which involves various types of physical, intellectual, and emotional labor termed “repair work” (Elish & Watkins, 2020). Care workers must also remain vigilant for potential harms such as algorithmic bias (i.e., discriminatory outcomes baked into automated systems), which the proprietary “black box” nature of many algorithms can make difficult to discern (Benjamin, 2020).

Even where the nature and functioning of AI is more transparent, such as through the use of AI model cards to explain the form and functioning of an algorithm, care workers have not been given tools to directly address or prevent obvious harms of AI (Fox, 2025a; 2025b). Though the most recent version of the American Association of Colleges of Nursing ([AACN], 2021) Essentials for nursing education mandates that nurses possess competencies in technologies used to deliver nursing care, access to specialized training in AI for care workers remains limited and can be cost-prohibitive. Most efforts to address AI harms in healthcare focus on improving patient outcomes by eliminating algorithmic bias. Far less attention has been paid to promoting the collective well-being of the care workers who use and are increasingly surveilled by AI technologies.

Considerable energy has been invested in efforts to reduce or mitigate biases present in AI systems, in pursuit of “algorithmic fairness” or “ethical” and “responsible” forms of AI (Cary et al., 2024; Ricaurte, 2022; Vo et al., 2023). The underlying assumption of this work mirrors that of disciplinary leadership: AI for healthcare is here to stay, so we may as well learn how to audit and address the biases therein. It is unlikely such coded biases (Benjamin, 2020) can ever be completely removed from these systems, given that even a perfect dataset will still be influenced by structures of oppression (e.g., racism and colonial empire), which shape not only the generation and analysis of data, but the very existence of data in the first place (D’Ignazio & Klein, 2020). Proposed solutions such as clinical algorithm audits and shared governance structures focus almost exclusively on safety and patient outcomes, usually through the lens of ‘health disparities’ (Obermeyer et al., 2019). While an important strategy to prevent and address AI harms, these efforts fail to recognize impacts of AI on care workers and their collective well-being, including ways in which AI use alters the performance of care work and the care worker role.

Despite extensive discussions of AI across almost every discipline, care workers have been given few options or tools to protect ourselves against real and potential harms of the rapid imposition of these technologies on our work. In June 2024, the U.S. Department of Labor (DOL) posted guidelines to bolster labor protections and worker autonomy in the context of AI in the workplace, inspired by previous guidance from the Biden White House Executive Order on responsible development of AI (U.S. Department of Labor, 2024). However, as of February 14, 2025 and following installation of the second Trump administration, the best practices roadmap had been erased from the DOL website. This follows a recent Executive Order countering policy efforts by the previous administration to place some guardrails on AI development and implementation (The White House, 2025). Without systemic approaches like strong federal labor protections and robust enforcement, care workers must look to each other and other sources for help. Now, and for the foreseeable future, care workers in the United States will need strategies and resources to support digital defense.

Digital Defense

The concept of digital defense is not new. It originates from the combination of the adjective ‘digital’ (composed of electronic data) with the noun ‘defense’ (means of protecting oneself, one's team, or another) (“Defense,” 2025; “Digital,” 2025). In cybersecurity, digital defense is a service that focuses on protecting an individual or organization from external threats such as malware, phishing attacks, data breaches, and identity theft. In the community setting, digital defense means collective strategies to protect community members against digital harms, including those inflicted by AI technologies used for state and corporate surveillance (Lewis et al., 2018). Private cybersecurity industry focuses primarily on development and sale of technological products, such as anti-malware software, dual-factor authentication, firewalls, and encryption to prevent human malfeasance. Community-based digital defense is different and considers how groups impacted by AI technologies and other forms of big data can take individual and collective action to protect themselves from harmful data systems, including those produced by industry and state actors. In this sense, digital defense leaves room for communities to imagine and articulate visions for new futures free of harm and oppression, where every person has access to what they need to live and thrive (Benjamin, 2022).

AI Harms and Care Workers’ Collective Well-being

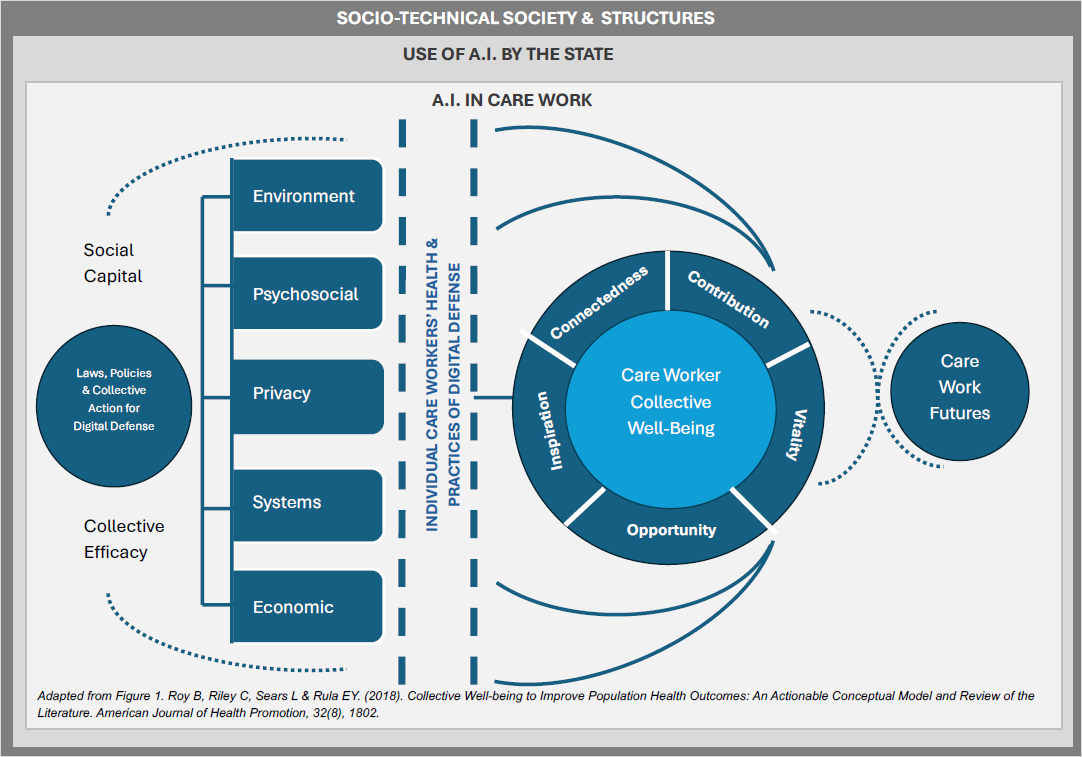

Care workers’ collective well-being can be conceptualized as a group-level construct, similar to concepts like social cohesion (connectedness or cooperativity of a group) or collective efficacy (a group’s perception of their ability to succeed at a task) (Roy et al., 2018). The concept incorporates individual care workers’ perceptions of their own lives and their profession, and is thus also dependent on individuals’ well-being, health behaviors, and social actions. This association is bi-directional: the properties of the group influence the individual, and vice versa. Here I have adapted Roy et al.’s (2018) actionable model of collective well-being to care workers operating in the context of the rapid and proliferating adoption of various forms of artificial intelligence for healthcare. This adaptation includes applications of AI by the state and employers to care worker surveillance. According to the original model, collective well-being can be defined along five dimensions: Vitality, Opportunity, Connectedness, Contribution, and Inspiration (Roy et al., 2018). Figure 1 presents this model adapted to the situation of nurses and other care workers operating in the context of AI and surveillant big data, mapping documented harms of AI onto dimensions of collective well-being.

Figure 1. Care Worker Collective Well-being and Digital Defense Against A.I.-related Harm

The adapted model of collective well-being assumes that the well-being of individual care workers contributes to and reciprocally interacts with the whole. The model recognizes some care workers will be disproportionately harmed both by technologies like AI and other structures of oppression that shape society and the human experience. Dr. Patricia Hill Collins termed this the “matrix of domination” (Hill Collins, 1990). These include care workers systematically harmed by structures such as racism and white supremacy; care workers who are transgender or gender-diverse; neurodiverse care workers and those who are chronically ill or living with disabilities; care workers who are poor or subject to financial precarity; care workers who are not U.S. citizens, especially recent immigrants and “DREAMERS”; care workers who are lesbian, gay, bisexual or otherwise identify as queer; the very old; the very young; and anyone who is pregnant or capable of pregnancy.

The Model and AI

Vitality

Vitality is defined as care workers’ self-perceived overall health, inclusive of positive functioning and emotional health, positive and negative affect, optimism, and the ability to control and regulate emotions. Research recently published in the journal Nature indicates that AI adoption can significantly increase worker stress, and this stress functions as a mediator between AI and increased levels of burnout (Kim & Lee, 2024). Claire Elish and Elizabeth Watkins added contextual detail to possible mechanics of this relationship through their ethnographic investigation of clinical AI for sepsis detection (Elish & Watkins, 2020). Their report, Repairing Innovation, outlines multiple ways in which disruption created by the introduction of the new technology demanded that care workers engage in acts of repair, as they worked to maintain patient safety and clinical care while simultaneously negotiating the human complexities of responding to the algorithm’s frequent alerts, a majority of which were false positives. Frequent alarms contribute to alarm fatigue, a persistent challenge impacting the safety of patient care and clinician ‘burn out’ (Nyarko et al., 2024). Care workers reported that this work of alerting other members of the clinical staff, negotiating with attending physicians, and coordinating responses in relation to their usual workload was under-recognized and undervalued, even as the technology offered no choice but to maintain the system (Elish & Watkins, 2020).

Situations such as this have led many care workers to report feelings of powerlessness (Lora & Foran, 2024; Yigit & Acikgoz, 2024). This is reflected in one recent survey of more than 2000 registered nurses in California, a majority of whom indicated they had no confidence in the commitment of their institutions to protect patient safety when adopting AI, even as the AI was being actively integrated into their workplaces (NNU, 2025). AI harms worker vitality through loss of privacy, as employers adopt surveillant technologies to continuously monitor and optimize worker performance through automated worker surveillance and management systems (AWSM) (Dillard‐Wright, 2019; Pfau, n.d.). Recent proposals for a “unique nurse identifier” to systematically track the billable value of nursing care, while aimed at leveling the playing field with physician providers who can bill for their services, are yet another step towards continuous surveillance of nurses’ labor by institutions and payers who have already demonstrated a willingness to sacrifice care workers’ collective well-being and quality of care in exchange for higher profit margins (Jenkins et al., 2024; Kelly, 2024).

Unlike a new diagnosis or prescription order wherein a single provider takes responsibility for a decision made at a specific point in time, nursing care is iterative, relational, and team based. Representing the nature and value of nursing care by attaching unique nurse identifiers to a list of itemized services reduces nursing care to a series of discrete tasks – erasing higher-level functions not tied to singular patients (e.g., staff supervision and coordination of care). Digitizing nursing in this way runs the risk of oversimplifying the complexity of what nursing care is, how and when it is delivered, and by whom (Jenkins et al., 2024). In the absence of transparent and enforceable guarantees from payers, institutions, and regulators regarding how such individual nurse-level data, once generated, will actually be used, nurses may trade our remaining privacy and professional autonomy for no gains at all, or worse, weaponization of these data by shareholders and tech industries with no social contract to care for the public.

Beyond surveillance in the workplace, care workers can be harmed when AI technology enables forms of state surveillance that disproportionately impact marginalized and hyper-surveilled groups, as is the case for many migrant populations; Black and brown communities; Indigenous people; trans and gender-diverse people and anyone who identifies under the LGBTQIA+ umbrella; persons living with disabilities; pregnant people and those capable of pregnancy; women and girls; non-citizens; the very young; the very old; and those who are poor or without stable housing (Electronic Privacy Information Center, 2025; Sinnreich & Gilbert, 2024). Use of AI by the state also impacts care worker vitality through various forms of administrative violence, which is defined as coercive and inequitable outcomes achieved through legal means, such as when institutional policies bar a care worker from employment or accessing necessary services based on factors like race or gender (McQuillan, 2023; Ricaurte, 2022). A recent example of administrative violence is the Trump administration executive order (EO) on January 27, 2025, which bans all transgender persons from serving in the U.S. military, including healthcare personnel, despite Constitutional civil rights protections against sex-based discrimination. Under this EO, any evidence of past attempts to access gender-affirming care or diagnoses of ‘gender dysphoria’ can be used to justify the separation of current service members and deny employment to new recruits. Such evidence, whether or not it is accurate, can be easily gathered through AI-mediated surveillance of service members’ digital communications, health records, and official documents. The EO also weaponizes records kept by healthcare workers administering care to trans service members, even if those records contain information as seemingly benign as standard demographic data. As of this writing, the EO has been temporarily blocked by U.S. District Judge Ana C. Reyes, though the future of trans U.S. military personnel remains uncertain (Moore, 2025).

Finally, care worker vitality is diminished when AI technologies and the infrastructure required to maintain them, such as the high volume water and power necessary for data centers to support high capacity computing, harm the environment (van der Vern, 2025). AI researcher Kate Crawford (2024) writes in Nature that planetary costs of AI, such as the generative AI behind chatbots and platforms like ChatGPT-4 and DeepSeek, are soaring. Data centers that power this technology require tremendous amounts of increasingly scarce fresh water to keep processors cool, in addition to large amounts of electricity. One preprint article indicates that while many of these numbers are deliberately kept secret, the global demand for water alone to support AI could be half of that for the entire United Kingdom by 2027 (Li et al., 2025). This still does not account for the massive amounts of precious metals and other non-renewable resources required to be mined and processed in order to manufacture the powerful chips and computer hardware that will run AI technology, nor the fractal chains of human labor such production depends on; much of this work is risky, under-waged, or unpaid (Crawford, 2021). As the climate crisis accelerates, care workers are likely to be subjected to more virulent and unpredictable weather patterns and associated disasters (e.g., wildfires), while demands for care due to worsening air quality, higher levels of vector-borne pathogens, and injury simultaneously increase demands for their labor.

Opportunity

Opportunity represents care workers’ cognitive appraisals of their financial situations, including perceptions of economic security or financial stress, socioeconomic mobility, and ability to achieve professional and life goals. AI tech represents a threat to workers when it lowers their wages and job security (Kelly, 2024). An example of this is Nvidia and Hippocratic AI’s 2024 product announcement for their “AI nurse.” The “AI nurse” is an avatar chatbot advertised as capable of replacing higher-wage nurses for only $9 an hour. Of course, the “AI nurse” is neither a nurse nor a replacement for primary nursing functions such as holistic patient assessment or direct care, but the pitch for a cheap and automated replacement “available 24/7” was appealing enough to the industry to attract significant new investment, helping to propel Hippocratic AI to “unicorn status” with at least $278 million in funding received as of last month (Landi, 2025; Temkin, 2025).

A February 2025 report by the U.S. Federal Trade Commission (FTC) noted potential threats associated with continued consolidation of power in the AI industry across just a handful of companies, particularly AI developers OpenAI and Anthropic and cloud computing service providers Alphabet, Amazon, and Microsoft (FTC Issues Staff Report on AI Partnerships & Investments Study, 2025). These corporations already exercise out-sized power over tech policy and regulation in the United States. Several of their CEOs were present on the platform beside the U.S. president at the inauguration in January (Swenson, 2025). As AI is incorporated into more public and private services, those at the helm will likely continue to amass even greater wealth and power over the domains in which AI operates. Labor experts predict this will likely result in less professional autonomy and fewer opportunities for wealth-building among the working classes (Steyne, 2025).

AI is also powering a new gig economy for care workers, including a slew of apps collectively known as “Uber for nursing” (Wells & Spilda, 2024). These apps function similarly to a taxi service, but instead of rides they advertise open shifts in local health systems. Care workers first log on, where they can see a list of open contracts and the wages advertised. They can then bid on these shifts much like one would for an auction on eBay, with contracts more likely to be awarded to workers of similar skillsets who bid lower than their counterparts, further depressing wages. Most apps make clear that by taking on these roles on random units, nurses also agree to assume a certain degree of professional liability if anything goes wrong (Wells & Spilda, 2024). Gig workers assume additional risks, lack paid time off or employee benefits like health insurance, and often experience little or no professional recourse in situations where they face safety hazards or discrimination on the job. Gig nursing apps do not provide malpractice insurance and care workers who use them are asked to waive their rights to participate in class action lawsuits.

Care workers operating in clinical settings with rapid turnover and a high proportion of temporary or ‘gig’ workers, enabled in part or in whole by such AI technology, may find their career aspirations thwarted by the ensuing chaos. Of concern is a lack of consistent mentorship and institutional investment in their on-going professional development. Though apps require ‘gig’ workers to present proof of licensure and other job requirements, automated vetting of preparation and skills may result in the hiring of care workers who are not adequately prepared or qualified for their roles (Wells & Spilda, 2024).

Loss of professional autonomy has also been reported in the context of AI, further impacting care workers’ sense of opportunity (Hickok & Maslej, 2023). When care workers do choose to pursue new professional opportunities, use of discriminatory forms of AI in application and hiring processes – also pervasive in the health professions – may hinder these aspirations (Wilson & Caliskan, 2024). One recent investigation of such an AI-driven resume screener using Massive Text Embedding (MTE) revealed significant bias in favor of resumes with white-sounding names. Working from a representative pool, the resume screener selected women’s resumes only 11% of the time and failed to select resumes associated with Black men in up to 100% of test cases, replicating real world biases in the employment sector (Wilson & Caliskan, 2024). Finally, recent surveys indicate that AI imposition impacts care workers’ sense of self-efficacy to pursue change in the face of automation over which they have been given no control or opportunity for recourse (NNU, 2023).

Connectedness

Care worker connectedness in an AI era reflects the degree to which they experience supportive and reciprocal relationships with secure attachments, and includes dimensions of social acceptance (positive attitudes towards others) and social integration (feeling a sense of belonging in their workplace and community) (Roy et al., 2018). For example, when AI automated alert systems are introduced to care work, they can cause profound disruption by changing workflows and introducing new forms of labor, such as managing false alarms (Elish & Watkins, 2020). Although numerous claims have been made regarding the potential of AI technologies to lighten burdens on care workers (e.g., the performance of tasks that take them away from the proverbial bedside), some research to date has found the converse to be true. For instance, one study considered the introduction of three e-Health applications, two of which included continuous patient monitoring applications and one of which involved digital patient intake to primary care. The findings demonstrated that nurses experienced added responsibility and complexity in their jobs which was additive and parallel to other work they had already been assigned (Frennert et al., 2023). Nurses were also called to reassure patients about the data and information made available in the applications, and to clarify how the applications worked.

This type of labor remains largely invisible at the organizational level, even as nurses reported less time to provide care at the bedside, less time communicating directly with colleagues (now moderated via the apps), and more sedentary work activities conducted in isolation as a result. Nurses also observed that patients occasionally provided exaggerated responses to the e-Health system, in hopes that this would expedite a care response. This left nurses to interpret and discern which information in the system was accurate, and when to act, adding further cognitive burdens to their workloads (Frennert et al., 2023). Cognitive ergonomics is an emerging field of study that addresses the mental stress and frustration humans experience when forced to interact with robots (Gerlich, 2025; Gualtieri et al., 2022). Introduction of AI can negatively impact care workers’ sense of trust, particularly when the technology amplifies inequities through algorithmic bias, or is used to surveil worker performance in ways that invade privacy or restrict autonomy (Vo et al., 2023).

Contribution

The contribution domain of collective well-being among care workers involves a sense of purpose attributed to engagement in their work and community (Roy et al., 2018). Care workers with high levels of contribution feel a sense of positive self-regard; workers feel the work is meaningful and makes a difference in the world. In the face of growing applications of AI for healthcare, care workers have expressed concerns about the “task-ification” of their roles, as some forms of automation appear to reduce the art and science of care to a series of discrete operations (Mitchell, 2019). Taylorism and the movement towards professionalization of the national healthcare workforce, which followed the introduction of factory work models to hospitals in the early to mid-1900s, resulted in hierarchies of work in which physicians were typically positioned at the top, with other care workers placed at descending levels of perceived skill and value (Rutledge, 2016; Wagner, 1980). In the 1990s, hospital staffing models were further streamlined through management-centric approaches (e.g., Six Sigma, Lean Start-up) that further displaced power over institutional decision-making from direct care workers to administrators and consultants hired to optimize workflows and maximize worker efficiency (Crotty, 2010).

The COVID-19 pandemic further stressed the care workforce, as dwindling staff and increasing care demands dramatically raised patient to care worker ratios in many settings. Increased administrative burdens, such as management of constant and larger streams of required data documentation and follow-up in big data-driven environments, have been associated with decreased proportion of care worker time engaged in acts of direct care (Frennert et al., 2023; Melnick et al., 2021). AI-assisted documentation aids and ambient intelligence have been deployed across some healthcare settings, with some research reporting associations with marginal reductions in documentation burden. Also, the majority of these tools are tailored for use by physician providers (Preiksaitis et al., 2023; Tierney et al., 2024).

Care workers laboring in conditions where they are unable to deliver the desired levels or quality of care, such as circumstances where AI has been introduced in lieu of safe staffing, may experience moral distress (McDonald, 2024; Pavuluri et al., 2024; Vo et al., 2023). The same is true of situations where a care worker perceives that AI technology introduces bias or causes harm and feels powerless to intervene, as some recent surveys support (NNU, 2025). Care workers who perform unacknowledged and undervalued labor to maintain these technological systems, such as the repair work outlined in Elish and Watkins’ (2020) report, may also feel that their sense of contribution is threatened.

Inspiration

The inspiration domain represents goal-striving, creativity, intrinsic motivation, and hope among care workers in the AI context (Roy et al., 2018). Inspiration can be increased by engagement in relationships and activities that are motivating and valued. Recent research on the topic of AI and creativity has demonstrated that use of some forms of generative AI like ChatGPT is associated with reductions in the diversity of collective content (Doshi & Hauser, 2024) and individual capacity for critical thought (Lee et al., 2025).

Generative AI companies like OpenAI currently face numerous lawsuits and complaints from creators, including care workers, that their intellectual property has been scraped from the internet and used without permission to train new AI models (Alter & Harris, 2023; Noveck et al., 2023; Parvini & O’Brien, 2024). Though authors frequently sign over copyright permissions to journals for their work upon publication, most did not anticipate a world with generative AI (Romer, 2023). Major publishers of health content, such as Routledge and Taylor & Francis, have recently signed agreements with companies like Microsoft to allow their publications to be used as AI training data. While such possibilities may be covered in author contracts, aggrieved authors have also demonstrated that AI models trained on their copyrighted works sometimes reproduce those works verbatim as “new” output, without appropriate attribution. This legally ambiguous space poses new dilemmas in terms of defining the limits of creators’ rights and copyright protections.

When AI is used to surveil care worker activities or communications, they may experience less autonomy and safety when engaging in acts of refusal or dissent (Pfau, n.d.). Fear of speaking up or expressing authentic thought in the workplace can stifle creativity and reduce inspiration. Care workers unable to bring their full and authentic selves to the workplace may become discouraged, or experience professional stagnation. Such exclusions can lead to premature departure from the care workforce, which further harms other care workers’ collective well-being.

How Can Care Workers Practice Digital Defense?

Care workers have options and resources when it comes to practicing digital defense to protect themselves, their colleagues, and those they accompany in care. Any given care worker’s ability to take action depends on numerous factors, including that person’s positionality vis-à-vis systems of power, the context in which they live, work, and play (including planetary health and conditions of the environment, psychosocial conditions, privacy, systems, and economic conditions), and capacity of care workers as a whole. This capacity may include their social capital, collective efficacy, and the status of cooperative efforts and coalition-building to promote care workers’ collective well-being and digital defense.

While not a replacement for urgently-needed structural transformation of the health and tech industries, government regulations, and other policy reforms, individual practices of digital defense – particularly when performed in concert with others as part of collective action – can still make a difference. Table 2 outlines actions that care workers can take, as individuals and collectively. The table includes currently available resources to support these efforts to shape care futures. Hyperlinks to resources outlined in this table are also in the process of being archived on the Nursing Mutual Aid website, available at: https://nursingmutualaid.squarespace.com

Table 2. Care Workers’ Digital Defense Toolkit

| Care Workers Can*… | | |
|---|---|---|
| Action | Explanation | Resources to Support |
| Find Your People and Connect | Institutions have inertia that acts to preserve the status quo. As a single individual, speaking up can be risky and leave one feeling exposed. That is why it is important to find others, either in your organization or in care work and community organizations, who share your vision for care futures that promote care workers’ collective well-being. | |
| Educate Yourself about AI | Care workers play a critical role in patient education regarding technologies impacting their care. While much of the AI operating in the clinical sphere remains opaque, it is important to separate realities of AI technologies from AI hype. | |
| Continually Assess AI Technology Alignment with Your Values and Professional Ethics | AI technologies are diverse and their form and functions will continue to evolve. This poses a challenge for care workers who must continually re-assess the degree to which these technologies align with their own values and professional ethics. A technology deemed “ethical” today may operate differently tomorrow. Care workers have a social contract with the public to practice ethically and therefore must continually re-assess the degree to which technologies shaping their work are ethical. | |
| Know Your Rights | A variety of laws and policies define care workers’ rights, both as private individuals and as laborers. These include the right to safe working conditions, non-discrimination based on categories such as race or gender, and fair pay. The U.S. does not have a Federal law protecting against data-based discrimination, but several laws have been passed at the state and local levels. These are hard-won rights. Knowing them gives you greater power to recognize when your rights are at risk and push back. | |
| Protect Your Privacy | While laws like the Health Insurance Portability and Accountability Act (HIPAA) protect certain types of health information shared in the context of healthcare visits, the U.S. lacks robust and universal data privacy laws. Data brokers buy and sell our personal data regularly, including sensitive health information collected through platforms such as social media, online stores, and even patient portals. The onus of privacy protection falls on individuals. Care workers engaged in activism, mutual aid projects, or highly politicized forms of care work such as provision of reproductive healthcare including abortion care, gender-affirming care, harm reduction, or care for persons who are incarcerated or criminalized, may be at even greater risk for targeting by surveillance and data breaches. Care workers from groups disproportionately impacted by state surveillance such as non-citizens, Black and brown communities and other communities of color, Muslim people and other non-Christian groups, transgender and gender-diverse individuals, persons who receive forms of state assistance, those who are pregnant or capable of pregnancy, persons residing in institutional settings, and persons residing in neighborhoods with increased police or presence of federal agents, are also at risk. | |
| Protect the Privacy of Others, Including Those in Your Care | Care workers including nurses are one of the largest contingents of data workers in the world. Our documentation in the electronic health record significantly impacts patient privacy and safety, especially in the presence of automation and AI systems. As some identities and forms of care are increasingly surveilled and even criminalized, care workers must ensure our actions and the records we keep do not expose those in our care to further risk of harm from state or institutional surveillance. | |
| Ask Good Questions | Care workers possess unique and important insights into the nature of care work and the constellation of factors that shape care outcomes. Care workers are positioned to ask questions about AI technologies that other groups may not even think to ask. Good questions are also informed by good information about what is actually occurring. While much of the commercially-available AI is proprietary, public institutions that choose to use it are subject to Freedom of Information Act (FOIA) requests. | Sample questions:<br>Learn to file a Freedom of Information Act (FOIA) request: |
| Lead or Participate in Research, Translation and Quality Improvement | Care workers can conduct or participate in original research to assess the utility, efficacy, and limitations of AI technologies used in care work. They can develop new measures and new datasets to capture information that has not been prioritized by other groups, such as records of whether AI implementation delivers on promises of reducing care work burdens or increasing actual time engaged in delivery of direct care to individual clients. Care workers can use knowledge from research studies to lead or participate in translational or quality improvement projects to systematically improve their collective well-being and care. | |
| Connect with Journalists | Journalists provide an important outlet for care workers to share first-hand knowledge and stories illustrating the role of the care worker, the nature of care work, the unmet needs of those we accompany in care, and impacts of AI tech. The Woodhull Revisited report revealed nurses remain under-cited in major media outlets. Many journalists have encrypted email accounts and anonymous tip lines care workers can use when fears of institutional reprisals might otherwise keep them from speaking out. During the COVID-19 pandemic, such means became a critical source of public information that helped shape policy. | |
| Talk to Legislators and Policy Makers | Policy makers and legislators have a responsibility to protect their constituents’ interests. They are also the ones who shape and vote on laws that govern technologies like AI. These individuals may not be well-informed about the realities of care work, the care worker role, or the use and impacts of AI, even as they make decisions that shape all of these things. Therefore, they are usually grateful for insights from the persons impacted by these decisions. | |
| Dispel and Correct AI Misinformation and Disinformation | Misinformation is false or inaccurate information, getting the facts wrong. Disinformation is false information that is deliberately intended to mislead, intentionally misstating the facts. Both abound in today’s internet culture and the AI hype cycle, adding to feelings of uncertainty, confusion, and fear. Care workers have credibility in their communities, who look to us for accurate information. To maintain this trust, we must work to dispel and correct both misinformation and disinformation, using reliable sources. | Read up on evidence-informed strategies to correct and dispel misinformation and disinformation, such as: |
| Reject Unethical Tech | Recent surveys indicate care workers are often given little choice about the technologies applied to their work. However, care workers retain agency over decisions about when and how to use some types of AI, including in their lives outside of work. For example, if so-called “Uber for nursing” gig work apps are having a negative impact on team morale and care workers’ collective well-being, should care workers continue to use them? | Check out and share examples of on-going and successful efforts to reject unethical tech, such as: |
| Engage in Acts of Refusal | When a piece of technology such as AI causes harm to care workers or those we accompany in care, or when AI tech is contraindicated based on one’s professional ethics, care workers may decide to engage in acts of refusal. Refusal is a long-standing practice in labor justice movements. Such refusals may entail consequences for the care worker, such as reprimands, disciplinary proceedings, or loss of employment. But such consequences do not erase refusal as a possibility for action in the digital defense toolkit. | First, connect with fellow care workers and other constituencies adversely impacted by unethical or harmful technology, such as labor unions and patient advocates. Collective action is not only more likely to be successful, it can also serve to better protect individuals participating from adverse consequences of refusal. Check out statements of Refusal, such as: |
| Report AI-related Harm & “Near Misses” | Care workers have a duty to report AI-related harm and “near misses” when these occur. Reporting provides an audit trail of what scholar Sara Ahmed calls “complaint.” While an individual report (or even several reports) may not lead to immediate change, a single report can tip the balance of complaint from stagnation to action. Either way, documenting and reporting harm remains a critical form of professional witness and form of care praxis. | Know your rights to protection as a whistleblower, such as: |
| Engage with Mutual Aid Efforts | Care workers have long engaged in acts of community care and mutual aid to meet needs not otherwise met by the state or institutions. Mutual aid is reciprocal, rather than charity-based, and deepens ties within communities. When governments and institutions fail to support care workers and communities, mutual aid is a survival strategy. | Learn about and share the history of mutual aid, such as:<br>Check out resources such as: |
| Maintain a Radical Imagination for Care and Care Work Futures | Much AI tech to date has been presented as a fait accompli, with use cases and impacts framed as inevitable outcomes of innovation and technological progress, whether or not those outcomes truly serve care workers or patients. However, care workers do not have to accept the status quo as it is presented to us. One of the great ironies of AI like machine learning and generative AI is that it “creates” by relentlessly repeating the past. By contrast, human imagination is a powerful tool for creating change. Once we imagine the types of worlds we need, we can work towards building them. | Draw inspiration from sources such as:<br>Curate spaces for creative praxis and co-visioning new and more just futures for care work and our communities |

*Individual care workers’ ability to engage in these actions is dependent on many factors including social identity and positionality vis-à-vis systems of power; specific contexts in which they live, work and play; and laws, policies and collective actions impacting conditions (economic, systems, psychosocial, environment, and privacy).

Directions for Policy and Collective Action

Civil rights organizations (e.g., the American Civil Liberties Union, the Electronic Frontier Foundation, and the Center for Democracy and Technology) have been on the front lines of legislative efforts at the state and federal levels to safeguard care workers’ rights when it comes to technologies like AI and big data. Prior successes on this front have included passage of data privacy laws in several states and release of the Biden White House Office of Science and Technology Policy (n.d.) “Blueprint for an AI Bill of Rights.” This blueprint included a number of AI-related protections, such as safe and effective systems; algorithmic discrimination protections; data privacy; notice and explanation when AI is in use; and human alternatives, consideration, and fallback (White House Office, n.d.). Scholars informing recent policy efforts, like James B. Rule, have provided additional guidelines for how to operationalize such rights. In his book Taking Privacy Seriously, Rule (2024) suggests multiple specific policy interventions, including: ban personal-decision systems that violate core values; require consent for disclosure of personal data; make personal data use minimal, transparent and trackable; institute a right to resign from personal-decision systems; and create a universal property right over commercialization of data about oneself.

Professional organizations responsible for the stewardship of disciplinary practice have the potential to play an important role in creating conditions for digital defense. However, organizational leaders must be willing to put their resources and sweat equity into advocating for policy reforms, such as the implementation of safe staffing laws and robust protections for whistleblowers who report unethical applications of AI in the workplace. In 2024, the board of the American Academy of Nursing assembled an AI Task Force to examine implications of AI for nursing. The ANA has convened its own AI innovation task force, in addition to releasing a position statement on AI ethics (ANA Center for Ethics and Human Rights, 2022).

Some of the most vocal advocacy for care workers’ collective well-being in relation to AI has come from labor unions, such as National Nurses United. NNU has released a Nurses and Patients’ Bill of Rights: Guiding Principles for AI Justice in Nursing and Health Care (NNU, n.d.). This statement addresses a number of considerations outlined in the model in Figure 1, including use of AI in automated worker surveillance and management (AWSM) and clinical decision support systems (CDSS). Within the bill of rights, they demand nurses’ and patients’ rights to: (a) access high-quality person-to-person care; (b) safety, placing the burden of proof on tech developers and industry; (c) privacy; (d) transparency regarding use of AI and data; (e) exercise professional judgment; (f) autonomy including the possibility of refusal of AI, consent and opt-in processes; and (g) collective advocacy for care workers and the persons they accompany in care. Labor unions play an important role in facilitating negotiation of contracts that protect these rights and care worker well-being.

Whether care workers come together and organize through mutual aid efforts, professional associations, labor unions, community-led coalitions, or other means, the power of the collective amplifies the efforts of each individual to improve planetary and environmental conditions, support psychosocial aspects like trust and sense of community, defend privacy, impact systems and institutions including education and social welfare, and improve economic conditions. Collective action along these lines could look like:

- Partnering with communities we accompany in care

- Narrating and translating the function of AI and impacts on care work to the public

- Centering experts from communities disproportionately impacted by AI design decisions

- Negotiating and enforcing robust AI-related contract protections and labor rights

- Resisting oversimplification and “task-ification” of care work

- Organizing support for the passage of common-sense AI regulations, safe staffing laws and civil rights legislation

- Influencing the Joint Commission and other accrediting agencies to adopt standards and metrics aligned with patient and care workers’ individual and collective well-being in the context of AI

- Fighting erasure of critical histories of labor and care worker contributions to the development and maintenance of AI and related technologies, including invisible labor demanded by AI systems

- Organizing support for necessary research and metrics

- Protecting care worker privacy and autonomy

- Shaping AI technology and agendas

- Insisting on robust whistleblower protections and material resources to support care workers who engage in acts of reporting and refusal

- Advocating for practices that support planetary health and climate justice

- Fostering creative practice and a radical imagination for more just care futures

Healing from AI-related Harms

In wound care, a thorough assessment, cleansing of the wound and application of appropriate barriers are first steps in protecting the area from further harm as it begins to heal. Similarly, the actions proposed in this paper align with that level of care and are aimed primarily at identifying, addressing, and where possible, preventing further harm in cases where AI tech negatively impacts care workers or communities we accompany in care. Even without AI in the picture, care workers have a lot to heal from. Care work professions have endured multiple blows in recent decades, including racist and exclusionary practices of their professional organizations, decimation of staffing models under corporate ownership, and the trials of the pandemic. Introduction of AI has been offered as a solution to these wounds, whether to optimize workflows, reduce care worker burdens, or predict and intervene on clinician “burnout.” For the most part, these are ‘top down’ solutions, offering superficial fixes for long-standing systemic issues. Should some prove successful in stopping the harm at its source, the question remains: how do we heal from the wounds already created? It is unlikely healing will come from the power structures that shaped the issues in the first place. Rather, it will require time, acts of community care, and before it can even begin, an end to imposition of exploitative and harmful technologies. Some advocates have also called for algorithmic reparations (Davis & Williams, 2021).

Strengths and Limitations

The landscape of AI continues to rapidly evolve. This article has addressed how nurses and other care workers can act collectively to protect themselves and those they accompany in care, beyond concepts of algorithmic fairness, to engage with both documented and potential harms associated with burgeoning use of AI technologies. The model presented here was adapted from an established model of collective well-being, but it is as yet untested. The AI harms outlined and their impacts on care workers, individually and collectively, are not necessarily a comprehensive list, nor are they weighted. In most cases, the nature of these relationships is likely to continue to evolve. Furthermore, it is important to acknowledge that acts of digital defense place a significant burden on care workers, who may incur adverse consequences as a result.

Care workers who choose to speak up or engage in refusal may risk discipline, their jobs, and possibly even legal repercussions. Care workers who choose to undertake research or quality improvement projects to assess and address AI-related harms will likely be adding this labor onto that which they already have on their plates. Risks associated with digital defense are disproportionately distributed. Care workers who are already exposed to a higher risk of harm from current systems of oppression and state surveillance are more likely to be negatively impacted for certain acts of digital defense, especially when those acts challenge the power status quo. A contemporary example of this is the increasing number of proposals for “mask bans” in public spaces and at protests, wherein disabled and immunocompromised care workers who choose to exercise their First Amendment rights are forced to choose between exposing themselves to infectious threats (by not masking) or carceral consequences (for choosing to mask) (Stanley, 2024).

The author brings their own positionality to this work, which shapes the outputs. This article was written during a period of tremendous political upheaval and uncertainty. In the days following installation of the second Trump administration, much of the federal guidance for the regulation of AI and AI-related civil rights generated during previous administrations, including the “Blueprint for an AI Bill of Rights,” was rescinded by executive order. The incoming administration has indicated there will be new rules for AI innovation going forward, which aim to severely restrict efforts at federal regulation. Federal actions keep changing the rules: as this is being written, the future of agencies principally involved in assessing impacts of AI on care workers and the public is unclear. It is no longer certain that the FDA, Consumer Protection Bureau (CPB), National Science Foundation (NSF), or the National Institutes of Health (NIH) will continue to exist as federal entities with capacities for systematic assessment and enforcement of federal policy. At the same time, the administration has declared that transgender identities are not valid and has erased all mentions of transgender people from federal websites, even for the Stonewall National Monument. As the author is transgender, this means the article you are reading has been written in a state of duress, which no doubt influences the tenor of this writing.

Some may critique this series of arguments as another example of AI naysaying or even sabotage: painting an unnecessarily pessimistic picture of the future of technology for healthcare. As a counter-argument, I offer that rather than being devoid of hope, this discussion represents an effort to imagine and create new and better care futures, wherein technology is used appropriately and consentfully and where everyone is able to get their needs met (Lakshmi Piepzna-Samarasinha, 2018). Even if all AI works exactly as advertised, and all possible algorithmic biases are minimized, if not completely eliminated, with structural reforms put in place to combat the many layers of oppression care workers experience at the intersections of racism, ableism, cis-sexism, and other biases, we would still want and need means by which to establish and maintain care workers’ collective well-being in the context of emergent tech. This modeling of care worker collective well-being in the context of AI, and the associated digital defense toolkit, provide foundations for future work to further these ideas and associated measurement strategies, evaluate the utility and validity of the framework, and refine individual and collective strategies to protect each other and those we accompany in care from harms associated with the introduction of artificial intelligence.

Acknowledgement: I would like to thank the many scholars, digital rights advocates, and accountability partners who contributed to the refinement of these ideas and the resources outlined in this paper, including Nursing Mutual Aid collaborators Dr. Anna Valdez, Dr. Wanda Montalvo, and Dr. Monica McLemore and UMass Health Tech for the People thought partners Dr. Jess Dillard-Wright and Dr. Ravi Karkar. I am also grateful for the reviewers whose feedback greatly improved the quality of the final article.

Author

Rae Walker, PhD, RN

Email: R.Walker@UMass.edu

ORCID ID: https://orcid.org/0000-0001-7146-5137

Rachel (“Rae”) Walker is an AAAS Invention Ambassador, UMass Nursing PhD Program Director, and co-founder of Health Tech for the People, a transdisciplinary research collaborative focused on building capacity to engage with tech ethics and issues of power involved in the design of health technologies. They are a registered nurse and former Peace Corps volunteer who completed their nursing BS, PhD, postdoctoral fellowship, and certificates in Health Inequities research and Nursing Education at Johns Hopkins University School of Nursing, in addition to a BA in English and BS in Biology at the University of Virginia. They have served as a Principal Investigator and collaborator on research involving multiple types of artificial intelligence, including applications involving machine learning, computer vision, and natural language processing. They have advised the White House Office of Science and Technology Policy and Federal advisory committees on health tech-related matters. Their advocacy for design justice and more just invention ecosystems has been featured on numerous podcasts, the TEDx stage, and in magazines such as Forbes, Scientific American, Science and on NPR.

References

$9 AI “nurses”? Inside the NVIDIA-Hippocratic AI collaboration. (2024, April 2). MedTech Pulse. https://www.medtechpulse.com/article/insight/ai-nurses